If you have only used the Wave User Interface, you may have though Overwrite was the only available operation. Each time to specify a dataset or update an existing data set with Wave, it overwrites the entire dataset with the new values.

There's a lot more to the External Data API, including the ability to append, upsert and delete data. Let's dig deeper into the capabilities of the External Data API and how to do more than just overwriting data.

External Data API

The External Data API provides the ability to upload .csv files into Wave Analytics. Along with the data file, a JSON formatted meta-data file can be provided to describe the structure of the data. If a file is not provided, the system will attempt to guess at the format of your data. Character data becomes dimensions, numeric values are measures and date/time are loaded as dates. The system provided metadata is usually close to what you want. Items like zip codes (numeric data that you want to be a dimension) and date formats (1/1/2016 - is that month/day or day/month) can be mis-mapped.

The general order of operations for using the External Data API is the following:

The general order of operations for using the External Data API is the following:

- Prepare your data and meta data files

- Connect to your org

- Insert a record into the InsightsExternalData object (more on that in a moment)

- Break your data into 10 MB chunks and upload to InsightsExternalDataPart object

- Start the upload and monitor for success

InsightsExternalData Object

The first object within the External Data API is the InsightsExternalData Object. With this object, you can specify the following- EdgemartAlias (api name of the dataset)

- EdgemartLabel (nameof the dataset)

- EdgemartContainer (app containing the dataset)

- If omitted when creating a dataset, My Private App is used

- MetadataJson (format file)

- Format (csv/zip format)

- Operation - (controls how the data is uploaded)

Let's look at operations in more detail. There are four possible options that enable you to do more than just overwrite the data.

- Append - appends all data to the dataset. Will create the dataset, if it does not exist

- Delete - deletes a row from the dataset. Must be identified with a unique key

- Overwrite - replaces the data set with the file. Will create the dataset, if it does not exist

- Upsert - inserts rows that do not exist based on a unique key, updates rows that match based on a unique key. Will create the dataset, if it does not exist

To finish up the InsightsExternalData object before going into details about the operations, there are additional fields that you can use to monitor the load process like Id, Status, StatusMessage, Dataflow and Action. As the InsightsExternalData object only has the header information about the dataset, you still need to upload the actual data. Use the InsightsExternalDataPart object to upload the data chunks.

Append, Upsert and Delete Operations

What's the best way to take advantage of these other operations? Certainly the data integration tools listed in Wave (Informatica, Dell Boomi, Jitterbit, etc.) provide support for append, upsert and delete. However, if you are not ready to jump to an integration platform and based on your use case, there are other options available.

What's the best way to take advantage of these other operations? Certainly the data integration tools listed in Wave (Informatica, Dell Boomi, Jitterbit, etc.) provide support for append, upsert and delete. However, if you are not ready to jump to an integration platform and based on your use case, there are other options available.

One of my favorite tools is Dataset Utils, a reference implementation of the External Data API by Salesforce. You can head over to the github repository to check out the source code or download the latest version. Dataset Utils is available cross platform on both Mac and Windows. The only prerequisite is the Java JDK 1.8 or higher.

Once you have installed Dataset Utils, you can run interactively with the User Interface or from the command line console. Time to drill into the details on using Dataset Utils.

Dataset Utils

The first step is to authenticate to your Salesforce instance with Dataset Utils. You can do this with your Username/Password plus Security token or rely on OAuth. Both Sandbox and Production instances are supported.

After login, you can see all the datasets in your org and search by name. Use the menu in the top right to access other functions. Switch to the File Upload Option to upload a CSV dataset.

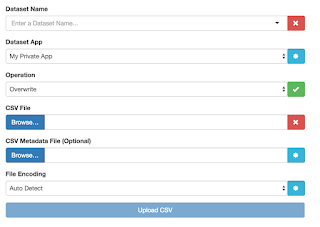

The File Upload Screen is where you can specify different options to control the upload behaviors

- Dataset Name - name for the dataset, or pick from existing

- Dataset App - select from existing Apps in wave

- Operations - append, delete, overwrite, or upsert

- CSV File - browse to the location of the file to load

- CSV Metadata File - if you have a layout file in JSON format, specify it here. Otherwise, it will be created automatically

- File Encoding - auto-detect or specify the format

Append Example

In my github repository for combining data in a wave data flow, I will load the Sales Data.csv file. As this is the first time working with the dataset, I will use the overwrite operation. The Process Monitor will refresh periodically, until the process completes.

Here is the Sales Data loaded into Wave. We have data for a couple of months in 2014.

Here is the Sales Data loaded into Wave. We have data for a couple of months in 2014.

Now it is time to use an Append operations. In the github repository, there is a Sales Data Append.csv file that has data for 2015.

When using an Append operations, its important that there is no overlap in the existing data and the data to load. Append will add the new data to the dataset, any overlapping or changed data will be duplicated.

It is also important to note that you should not have a unique identifier in your data or the append will fail.

When using an Append operations, its important that there is no overlap in the existing data and the data to load. Append will add the new data to the dataset, any overlapping or changed data will be duplicated.

It is also important to note that you should not have a unique identifier in your data or the append will fail.

Set the file upload with an Append operations, allow the load to finish and check out the results. In addition to the 2014 data, we now have 2015 values.

Upsert Example

To perform an Upsert, we need a data set with a unique key. For this example, we will use the Sales Data Identity CSV file. In addition, we will use a metadata file to specific the unique id. The full metadata file reference is documented here.

In this example, there is a Sales Data Identity.json layout file. Let's examine a unique key specification within the layout. On the ID field, the isUniqueId field is set to true.

In this example, there is a Sales Data Identity.json layout file. Let's examine a unique key specification within the layout. On the ID field, the isUniqueId field is set to true.

Now, to specify the details in Dataset Utils. Although this is the first time loading the data set, we can still use Upsert as it will create it the first time.

In Wave, we have a result similar to what we saw with the Append example. Time to work on upserting data.

There is one more data file named Sales Data Identity Insert.csv. For this set of data, I doubled the price values from April 2015 as well as added a new month of data for January 2016.

Once upserted, the lens in Wave is updated accordingly - new values for 2014 and a January 2016 appears for the first time. No need to overwrite all the data.

Once upserted, the lens in Wave is updated accordingly - new values for 2014 and a January 2016 appears for the first time. No need to overwrite all the data.

Removing rows from this data set would be as easy as loading another file with a delete operation and the identifier of the rows to remove.

Putting it to Use

Using the additional operations in the External Data API along with Dataset Utils has helped me cut data processing time significantly. In addition, I have used it to automate my load processes with the console mode and command line operations. Hopefully, you can put the power of wave to use as well.

Additional Considerations

- Be sure to check out the External Data API limits

- One important note with Upsert. You cannot change the underly dataset structure in the upsert. For example, if you want to change a field from a single-value to multi-value the upsert will fail. Instead, you will want to overwrite the entire dataset.

Thanks Again!!!

ReplyDeleteI have a new question for you: below I have the SAQL Query posted for a table in wave:

q = load "0Fb50000000LPSRCA4/0Fc50000000NiBCCA0";

q_A = filter q by 'PAYM_MOD' in ["CA", "CB"] && 'Emit_SUM_REF' > 0;

q_B = filter q by 'PAYM_MOD' in ["CA", "CB"] && 'Emit_SUM_REF' > 0;

q_D = filter q by 'PAYM_MOD' == "CA" && 'Emit_SUM_REF' > 0;

q_E = filter q by 'PAYM_MOD' == "CB" && 'Emit_SUM_REF' > 0;

result = group q_A by 'Hour' full, q_B by 'Hour' full, q_D by 'Hour' full, q_E by 'Hour';

result = foreach result generate coalesce(q_A.'Hour', q_B.'Hour', q_D.'Hour', q_E.'Hour') as 'Hour', coalesce(sum(q_A.'Emit_SUM_REF'), 0) as 'A', coalesce(count(q_B), 0) as 'B', coalesce(sum(q_D.'Emit_SUM_REF'), 0) as 'D', coalesce(sum(q_E.'Emit_SUM_REF'), 0) as 'E';

result = foreach result generate 'Hour', 'A', 'B', 'D', 'E', A/B as 'C';

result = order result by ('Hour' asc);

result = limit result 2000;

Question: the hour field is a text field so when it sorts it takes sorts 2 after 10,11,12 etc.....Is there a quick way to make it sort properly without going back to pad my entire data with a two digits ??

One quick observation is that you are not using the typical date field to hold hours. If you use Date_Field_Hours it does automatically pad with zeros.

ReplyDeleteTo change a text field, you can use something like the following. I don't have hours in my example, do I do have the floor number stored as a string. So when you get to floor 10, 11, etc., it is out of order.

You can use a case statement in the SAQL along with the len function to replace the Hours (or in my case Unit Floor) with the value padded with a zero when only 1 character or use the value as is the rest of the time.

While I am showing both the original and padded value in my SAQL, you would only want the padded version and still call it 'Hour'

q = load "DataSet";

q = group q by 'Unit_Floor__c';

q = foreach q generate 'Unit_Floor__c' as Unit_Floor__c,

(case len('Unit_Floor__c') when 1 then "0" + 'Unit_Floor__c' else 'Unit_Floor__c' end) as Padded_Unit_Floor,

count() as 'count';

q = order q by ('Padded_Unit_Floor');

q = limit q 2000;

great that worked...except I can't get it to filter with the rest of the dashboard....Anyhow thanks again!!!!

ReplyDeleteMinor problem.....I really appreciate your help!!

Hi can you help me , how to use compressed load of gzip as mentioned in documentation. i tried compressing using unix command line gzip command and then splitting files in 10mb chunks.. but it fails. Any param we need to set to let EA know we are sending in compressed data.

ReplyDelete