Friday, December 18, 2015

Top 10 Spring 16 Communities Features

Thursday, December 17, 2015

Learning About Apex Integration Services

Trailhead, the free, fun and fast way to learn Salesforce, has new content. There is a new Advanced Admin Trail that features modules on Advanced Formulas (a new module), Event Monitoring and Lightning Connect. Keeping with the Lightning theme, there are also modules on Chatter and Data Management within the Lightning Experience.

For those Star Wars and Force (.com) fans out there, check out the Build a Battle Station App project. The intro in the first step had me laughing out loud and is worth a read. Best of all, if you complete this clicks-not-code project by December 31, 2015, you have the chance to win awesome prizes like a PlayStation 4 or a Sphero robot. Check out the full details.

If you have completed the Application Lifecycle Management module, you will want to check out the updates made to it. Finally, the module that caught my eye, and one I want to explore in more detail, is Apex Integration Services.

Tuesday, December 1, 2015

How To Approach Managed Package Release Testing

Beta Packages

A great option in the packaging org is the ability to create beta packages. A beta package lets you create a package, install it into another org and test it without having to commit to the components in the package. In contrast, the components in a managed released version are permanent. With each Salesforce release, ISVs gain additional capabilities to remove components from released packages. However, certain components, like global interfaces, cannot be deleted or changed. In many cases, going straight to a released version without final testing can leave you stuck with components you don’t want.

Beta Install org

My first step is a simple new installation test of the beta package. I have a beta test org dedicated to this purpose. I install the package to verify that there are no unexpected installation problems (like the master/detail permission set issue) and to test out post-install scripts.

While many managed packages do not include permission sets, I encourage you to consider adding them. A simple search of Ideas on the Success Community shows that setup hurdles with managed packages are a common source of complaint. Permission sets simplify the setup process, making it easy to assign functionality to groups of users. While permission sets are great, it is not possible to include permissions on standard objects in permission sets for a managed package. In addition, a permission set that includes permissions on a custom object that is the child in a master/detail relationship with a standard object will cause the install to fail.

My approach has been to include everything possible in a permission set that is installed with the app. While the installation guide includes details for creating the additional permission sets manually, that is not the best admin experience. I tried to create the permission sets as part of a post-install script, but the context in which that script runs does not have permission to do this. Feel free to vote for my idea to allow this. Instead, I offer an Apex class that can be installed in the org, executed to create the additional permission sets and then removed.

While performing a beta install test, I do not go through extensive post-install setup or create sample data. Any additional setup or data must be undone before you can uninstall. Remember, the point is to test the install, not the entire app. You will be installing and uninstalling in this org a lot. It is possible to install and uninstall the managed package through the API, which can be a time saver.
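The post does not say which API it uses, so what follows is only a sketch under assumptions. It assumes the Metadata API `deploy()` route, in which an `InstalledPackage` component installs a package version and a `destructiveChanges.xml` manifest naming that component uninstalls it. The namespace, version number and API version are hypothetical placeholders, and whether this route accepts a beta version number in your orgs is something to verify. The sketch only builds the zip payload; sending it to `deploy()` (for example from Workbench or a Metadata API client) is left out.

```python
"""Sketch: build Metadata API deploy payloads that install or uninstall a managed package.

Assumes the InstalledPackage metadata type; namespace and versions are hypothetical.
"""
import io
import zipfile
from xml.sax.saxutils import escape

MD_NS = "http://soap.sforce.com/2006/04/metadata"
API_VERSION = "34.0"  # assumption: any API version that supports InstalledPackage


def _manifest(namespace=None, include_version=True):
    """package.xml-style manifest; lists the InstalledPackage member when a namespace is given."""
    lines = ['<?xml version="1.0" encoding="UTF-8"?>', f'<Package xmlns="{MD_NS}">']
    if namespace:
        lines += [
            "    <types>",
            f"        <members>{escape(namespace)}</members>",
            "        <name>InstalledPackage</name>",
            "    </types>",
        ]
    if include_version:
        lines.append(f"    <version>{API_VERSION}</version>")
    lines.append("</Package>")
    return "\n".join(lines) + "\n"


def _installed_package(version_number, password=None):
    """Body of installedPackages/<namespace>.installedPackage."""
    lines = [
        '<?xml version="1.0" encoding="UTF-8"?>',
        f'<InstalledPackage xmlns="{MD_NS}">',
        f"    <versionNumber>{escape(version_number)}</versionNumber>",
    ]
    if password:  # only needed for password-protected packages
        lines.append(f"    <password>{escape(password)}</password>")
    lines.append("</InstalledPackage>")
    return "\n".join(lines) + "\n"


def install_files(namespace, version_number, password=None):
    """Files for a deploy that installs (or upgrades to) the given package version."""
    return {
        "package.xml": _manifest(namespace),
        f"installedPackages/{namespace}.installedPackage": _installed_package(version_number, password),
    }


def uninstall_files(namespace):
    """Files for a deploy that uninstalls: an empty package.xml plus destructiveChanges.xml."""
    return {
        "package.xml": _manifest(),
        "destructiveChanges.xml": _manifest(namespace, include_version=False),
    }


def to_zip(files):
    """Zip the files in memory, ready to be base64-encoded for a deploy() call."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", zipfile.ZIP_DEFLATED) as archive:
        for path, text in files.items():
            archive.writestr(path, text)
    return buffer.getvalue()
```

For a hypothetical `acme` namespace, `to_zip(install_files("acme", "1.2"))` would produce the install payload and `to_zip(uninstall_files("acme"))` the matching uninstall payload, so an install/uninstall cycle becomes two scripted deploys.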

Beta Upgrade org

The second step in the process is to test the upgrade from the last released version of the package (betas cannot be upgraded). For this, I have a second, dedicated beta upgrade org, which has the latest managed release version of the package prior to the beta installed. I would rather find out early in the process that there is an upgrade issue. As with the Beta Install org, there is only minimal setup on the prior version. After upgrading to the latest beta, the package will need to be uninstalled to test again.

To further facilitate installation and setup testing, I am working on setup and teardown processes that will use the Metadata API to automate more of the testing process. More on that in the future.

Beta Testing org

Now that I know the beta package will install and upgrade, it is time for more thorough testing. It is best to start with new install testing first. I want to preserve my previously set up orgs for testing the final beta version, where only potential upgrade issues remain. This helps preserve more realistic data from the prior version. While I could use the Beta Install or Beta Upgrade org for this testing, it would defeat the purpose of quick and easy installs. In this org, it’s time to run the app through its paces, including testing permission sets or profile settings with different users. Ideally, no issues are found and testing can move forward to the last step. If issues are found, it’s time to build a new beta version, uninstall and start testing again.

Upgrade Testing

At this point, it’s time to test the upgrade from a prior version with full setup and data. As many package components are not upgradeable (such as page layouts, list views and reports), it is good to check out the upgrade experience as well. You can verify that the upgrade notes accurately capture all of the needed changes. Saving this for last minimizes the amount of testing setup that is needed. In my case, I have several different testing configurations to try out, although you may only need one depending on your app. It is worth mentioning that I have set up automated data loading to get an environment up and running quickly. All of the data needed in an org is loaded by a master script that runs Data Loader from the command line.

Managed Released

One of the best benefits of using a beta is how easy it makes moving to a released version. Uploading the released version is simply a matter of changing a radio button when uploading the package. No package content has to change, which means there is no room for error. This ensures that what was tested is what you release. Remember to deprecate any beta package versions created during testing. This will not affect existing installs, but it prevents any new installations of those package versions. Finally, I do one last smoke test by installing the managed released package into the Beta Upgrade org, after uninstalling the beta version, since I will need the new version installed there next time. While this is probably not necessary, I don’t want the first customer experience to be a failed install.

Wrap Up

With the above steps, I am confident in the app that I am releasing to customers. Let me know your thoughts and if you have a different approach to release testing. For example, if you are using a throw-away test namespace and going right to a managed released version, I would enjoy hearing your results. I am always looking to make the process better and faster.

Tuesday, September 22, 2015

Dreamforce 2016 Prediction - A New Learning Cloud

After another exciting Dreamforce full of new technologies like the IoT Cloud and customer success, it is a good time to reflect on what was shown and turn an eye to the future. Here is my prediction on what we will see from Salesforce in the next year.

The Past

Dreamforce 14 saw the launch of Trailhead, a new online, guided learning tool from Salesforce to help developers gain knowledge on the platform. In the year since launch, the content and capabilities of Trailhead have expanded greatly. In addition to developer trails and modules, there is content for admins, users, non-profits and ISVs.

Salesforce’s launch of the Lightning Experience was backed with extensive training in Trailhead for users, admins and developers. Finally, the Developer Zone at Dreamforce was all Trailhead all the time, complete with a cool national parks motif and tons of opportunities for hands-on learning.

The Future

I believe next year’s Dreamforce will see the launch of a new cloud – the Learning Cloud™. With the underpinning technology that drives Trailhead, it is time to make customers, partners, non-profits and all stakeholders successful in their educational efforts. Here is how each constituent will benefit from the Learning Cloud.

Customers

Have you met a customer that does not need to train employees? I have not encountered one. The obvious application is to train employees on the Salesforce system, including specific customizations and apps. However, the Learning Cloud can do so much more. The onboarding that every new hire must complete, from HR to IT, can live in the cloud.

While I have not heard many people speak excitedly about compliance or HR training, it is time to change the experience. Do not discount the gamification aspects of earning points and badges while competing with others. Leaderboards for new hires, departments and teams add an element of fun and peer pressure to keep up with co-workers. Through integration with Work.com, a manager can set up and track progress on training goals for their direct reports. Executive dashboards in the Analytics Cloud provide insight into the organization’s skill set and corporate readiness to pursue new ventures.

Employees are only half of the equation for Salesforce’s customers. The applications for a customer’s own end customers are extensive. Need to train operators on a new model of industrial equipment? The Learning Cloud has you covered. The same is true if you need to certify partners on your solution or service. It applies to business-to-consumer scenarios as well.

Looking at the gamification aspects again, consumer behavior can be easily incentivized. Individualized customer journeys can be set up in the Marketing Cloud to provide a reward code, special level or discount offer for active participation in learning activities. As many products and services have lost their distinguishing characteristics from competitor to competitor, companies need a way to stand out. Differentiating yourself based on educational capabilities is one more way to avoid competing solely on price.

SI Partners

The System Integrator partners are in the business of implementing the various Salesforce clouds, including the development of custom applications in the App Cloud. There is no better way to complete the roll-out and onboarding phase of an engagement than having learning management built into the same platform. Whether the need is for technical or business process training delivered on-demand or in person, the Learning Cloud covers the need.

We all know how difficult change management is, especially in larger organizations. Overall training on the why, where, what, when and how of the change can be distributed in advance of go live. Additionally, help within the applications can point directly to the correct modules, providing relevant, contextual help when a user needs it. Creating this educational material, which can be updated and expanded as the solution evolves, only adds to the benefits of developing on the Salesforce customer success platform.

ISV Partners

The case is even stronger for existing partners developing apps sold on the AppExchange. With packaged application development, there is always the need to produce educational materials for administrators and end users. The old approach of writing a user manual that sits unopened on someone’s desk clearly does not drive engagement and success.

Rather than wasting time creating an unread manual, which frustrates users and the content creators, engage with focused modules including interactive content based on actual app usage. For example, analysis of usage metrics can help identify where additional training is required.

Integration with the Learning Cloud can go a step further to build compliance into the solutions. For example, if employees must successfully complete Personally Identifiable Information (PII) training before accessing sensitive data, the app can block access for the user until the PII module has been passed.

New Partner Opportunities

With the introduction of the Learning Cloud, new opportunities for partners will emerge. The first is for SI-like companies. These partners will be experts in educational theory and/or content creation. They will advise customers on how to set up effective learning modules and paths, including assessment structures that accurately measure understanding. In addition, these partners will help structure effective lessons and questions, as well as the graphics, videos and interactive content that make learning effective.

The second category of new partners will follow the path of current ISV partners. The Learning Cloud becomes a platform for them to reach customers that have nothing to do with Salesforce. Need to provide training to customers? These partners will have a range of learning management solutions to fit your needs. Think of the current solutions provided by companies like Pluralsight or Udemy. No longer will they need to focus on the mechanics of delivering training. Instead, it is about the content and making trainees successful.

Non-Profits

Salesforce is a strong supporter of improving the world through its Salesforce Foundation and 1-1-1 model. While there are many applications for the Learning Cloud in the non-profit space, a couple of applications come immediately to mind.

We have an education system that is not as effective as it should be. With the Learning Cloud, we could flip how we teach students and improve outcomes. Rather than introducing topics at school and reinforcing the learning at home with homework, the opposite approach becomes possible. Use modules to introduce concepts to students, including video, interactivity and initial assessment quizzes. Homework then turns into schoolwork under the supervision of a trained educator who is versed in educational theory and techniques.

If revolutionizing the educational model is too radical an idea, applications remain within the current approach. What parent hasn’t struggled at one time or another when assisting a child with homework? Whether they need a refresher on the topic at hand or a better understanding of how concepts are being taught, parents can finally have a resource to help their children learn. To amplify the effect, add a connected Community Cloud where parents and educators can work together to ensure no child is left behind.

Within the Salesforce community, there are multiple outreach groups that bring targeted populations to the platform. For example, Girls Who Code and Vet Force reach out to specific segments of the population. Part of the mission of these organizations is to expose their constituents to Salesforce and show them how to use the platform. Being able to customize content focused on their members’ needs furthers engagement and participation. In-person learning contests and hack-a-thons are much easier to organize and deliver with a platform to facilitate the event.

Be Prepared

With a relentless focus on customer success and so much need in the learning management space, the Learning Cloud seems like a natural next step for Salesforce. For the LMS companies out there, consider this your warning. Salesforce is coming and will disrupt your market. Most of you are not even aware of the threat.

As an avid user of Trailhead since its launch in 2014, I am excited to see what the next year brings. Think about all of the content you would publish to the Learning Cloud in order to educate your stakeholders. Then patiently hold your breath for the next big thing to be announced.

Tuesday, September 8, 2015

Learning Lightning with Trailhead

My last article covered the new Salesforce Lightning Experience. Since then, I have heard quite a few questions about how to come up to speed with the new experience. As with most Salesforce related learning questions, Trailhead has to be your first stop.

With the global preview on August 25, four new, Lightning-focused modules were released in Trailhead. The new modules range from the Sales Rep’s experience as an end user through everything Developers should know about Lightning.

If you are an Admin, don’t worry there are modules for you as well. If you are starting out as a new admin with Lightning, check out Starting with Lightning Experience. For admins planning a migration, Migrating to Lightning Experience helps you understand the new experience and plan a successful migration.

Tuesday, August 25, 2015

The Lightning Experience - A New Salesforce

Tonight, Salesforce announced something big - a whole new Salesforce user experience. If you missed the global preview that included live viewing parties across the world, you can check out a recording. Or keep reading to learn about the changes and what it means to you.

Salesforce has been on a three-year engineering mission that started with reimagining the mobile experience in 2013 with Salesforce1 mobile and the platform in 2014 with Salesforce Lightning. This year, attention has turned to the user experience, with an emphasis on the Sales Cloud.

What's New

Simply put - a whole new user experience, a whole new way to sell. The new experience is designed so you can sell faster, sell smarter and sell the way you want. Take a look at a few sample screens from the new experience - Home page, Opportunity Pipeline Board, Opportunity Details and Dashboards.

The home page quickly displays key information that a sales rep needs to manage their day. A quarterly performance chart shows performance toward goal. The Assistant section on the right identifies key actions to take, such as overdue tasks. The Account Insights section brings in key information about the accounts you are following.

On the left is an omnipresent navigation menu for quickly moving through the app. Simply click the three bars to expand and collapse the menu. Search is now top and center, allowing quick and easy search across all of your records.

The Opportunity Board is an exciting new addition coming in Winter '16. With a new Kanban board view, you can easily see all of your open opportunities, their stages, values and key information. The totals for each stage are summarized at the top. Best of all, you can drag and drop an opportunity from one stage to another and watch it update in real time!

Drilling into an Opportunity, key information is located at the top of the page. Next, Sales Path is front and center with the progress pill, so users can see where they are in the sales process and get guided selling options. Activities such as logging a call or creating a task or an event are available with a few clicks. An Activity Timeline of past activity and upcoming Next Steps displays below the activity section. Hovering over related objects, like an Account, provides additional details.

For the dashboard lovers out there, take a look at the new dashboard page in the Lightning Experience. You now have the ability to control the sizing of charts on the dashboard, including having more than three columns! You can easily drag and drop reports and resize them based on your needs.

What Does It Mean for You

After seeing the Lightning Experience, the most common question is how to get it. The Lightning Experience is coming with the Winter '16 release. Inside of an org, an administrator can enable the Lightning Experience (Setup | Lightning Experience | Enable the New Salesforce Experience).

Then you can assign the Lightning Experience User permission to either a profile or a permission set. Keep in mind that standard profiles automatically receive this permission, but you will have to add it to custom profiles. This enables a phased roll-out to improve adoption rates and manage change.

With this release, the Sales Cloud is the focus for the new experience, so if you are using the Service Cloud, you will still use the classic experience. In addition, some features, such as the Orders object, are excluded from the new experience. Finally, if you are using Person Accounts, the new experience does not support them yet.

No need to worry if you are not ready to migrate. The classic user interface will be supported for some time.

How to Learn More

There are numerous resources available where you can learn more about the Lightning Experience. Check out the following resources:

- Watch the Global Preview recording

- Check Out Blogs

- Salesforce Success Community

  - Groups to collaborate around Lightning Experience

  - Upcoming webinars to discuss the changes

- Lightning Design System

- Trailhead

  - Sales Reps - Using Lightning

  - Admin - Starting with Lightning

  - Admin - Migrating to Lightning

  - Developer - Lightning Experience

- Sign up for a Winter '16 Pre-Release Org and try it out

- Contact us to learn more

Wednesday, August 5, 2015

Trailhead: One Year (Almost) Later

Trailhead is Born

Since its launch with three trails and a handful of modules, it seems nothing can stand in the way of Trailhead’s continued growth. While its initial focus was on developers, the most recent update this week eliminates any doubt that Trailhead is the learning platform for all. Newly released modules and trails cover CRM Basics, Accounts & Contacts and Leads & Opportunities. While prior updates included Administrator-focused content as well as Beginner and Intermediate trails, this update seems to be the start of something more.

Learning for All

Looking forward, I would not be surprised to see more content focused across a wider audience within Trailhead. The first hints of this come with the new Dreamforce Trail which helps you get ready for Dreamforce ‘15. In a year from now, we will probably be talking about Trailhead being the one stop shop for learning everything about Salesforce and bemoaning how tough life was BT (Before Trailhead).

My Trailhead Addiction

I have to admit, I am a certified Trailhead addict and try to jump on the modules as soon as they come out. There are a couple of mobile modules and a project that I have yet to complete. However, I have blazed all of the other trails, including the new modules that were recently released. Let’s take a deeper look at a module that I particularly enjoyed in the new release: Event Monitoring.

Trailhead’s Event Monitoring Module

The Spring ‘15 Release introduced a new Event Monitoring API that provides access to the same information Salesforce uses to understand how your Salesforce org is being used. Access to the insight in these events makes it easy to identify abnormal patterns and help secure your data. The module has three challenges.

The first is Getting Started with Event Monitoring, which provides an overview of the feature, what can be tracked as well as several use cases for event monitoring. Event monitoring provides tracking for over 29 different types of events including:

- Logins

- Logouts

- URI (web clicks)

- UI (mobile clicks)

- Visualforce page loads

- API Calls

- Apex executions

- Report exports

With all this detailed information at your fingertips, one possible use case is to monitor for data loss. For example, when a salesperson leaves, use event monitoring to look for any abnormal report export activity to prevent your customer list from landing with a competitor.

Query Event Log Files is the second challenge in the module. Once you have the correct permissions to use event monitoring, head over to Workbench to use the SOQL Query Editor or REST Explorer to take a look at the events in your org. While both SOAP and REST APIs exist to query event log files, there are key differences, which the unit helps explain. I especially like the REST examples and results presented in Trailhead. With REST, you can query for a log file ID and then retrieve the log file contents as CSV.

/services/data/v34.0/query?q=SELECT+LogFile+From+EventLogFile

/services/data/v34.0/sobjects/EventLogFile/<id>/LogFile
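The two requests above can also be scripted. The sketch below is a minimal Python example, using only the standard library, that builds the same query URL and a per-record log file URL, then fetches them with an OAuth bearer token. It assumes you already have an instance URL and access token (both hypothetical here), selects `Id` in the query so each record carries the ID needed for the second request, and does not follow `nextRecordsUrl` paging for large result sets.

```python
"""Sketch: build and call the EventLogFile REST URLs shown above (standard library only)."""
import json
import urllib.request
from urllib.parse import urlencode


def query_url(instance_url, api_version, soql):
    """URL for /services/data/vXX.X/query; urlencode turns spaces into '+' as in the example."""
    return f"{instance_url}/services/data/v{api_version}/query?{urlencode({'q': soql})}"


def log_file_url(instance_url, api_version, record_id):
    """URL for the LogFile field of one EventLogFile record (the CSV contents)."""
    return f"{instance_url}/services/data/v{api_version}/sobjects/EventLogFile/{record_id}/LogFile"


def get(url, access_token):
    """GET a URL with an OAuth bearer token and return the body as text."""
    request = urllib.request.Request(url, headers={"Authorization": f"Bearer {access_token}"})
    with urllib.request.urlopen(request) as response:
        return response.read().decode("utf-8")


def download_logs(instance_url, api_version, access_token):
    """Query EventLogFile records, then download each log's CSV, keyed by record Id."""
    soql = "SELECT Id, EventType, LogDate FROM EventLogFile"
    result = json.loads(get(query_url(instance_url, api_version, soql), access_token))
    return {
        record["Id"]: get(log_file_url(instance_url, api_version, record["Id"]), access_token)
        for record in result["records"]
    }
```

Passing the post's own query, `SELECT LogFile From EventLogFile`, to `query_url` reproduces the first path shown above after the instance URL.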

The last step to earning your Event Monitoring badge is the Download and Visualize Event Log Files challenge. There are multiple methods for downloading event log files, including a browser app (check out the module for full details). Once you have the data in hand, you can use your favorite tools to analyze and chart trends over time.

While I have no doubt Excel and Google Docs will be popular choices, I love the option to push the data into the Salesforce Analytics Cloud. You have the benefits of a platform built to explore data and unlock insights. Best of all, as another Salesforce cloud, it is easy to share results with the rest of the team. For example, I pulled out the event log for API calls, loaded them into the Analytics Cloud and reported occurrence by IP address.
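Before loading the data anywhere, a quick tally can show what is worth charting. The sketch below counts rows per value of one CSV column. It assumes the API event log CSV has a `CLIENT_IP` column; check the header of your own download and pass a different column name if needed.

```python
"""Sketch: tally event log CSV rows by one column, e.g. API calls per client IP."""
import csv
import io
from collections import Counter


def count_by_column(csv_text, column="CLIENT_IP"):
    """Count rows per value of `column`; rows with a blank value are skipped."""
    reader = csv.DictReader(io.StringIO(csv_text))
    if reader.fieldnames is None or column not in reader.fieldnames:
        raise KeyError(f"column {column!r} not found in CSV header")
    return Counter(row[column] for row in reader if row[column])
```

Calling `count_by_column(csv_text).most_common(10)` on a downloaded log lists the ten most active IP addresses, a quick check before building a fuller analysis.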

What Will You Learn Next

By now, I hope you are as excited as I am to learn something new. Head on over to Trailhead, pick a module or trail and expand your knowledge today.

Saturday, August 1, 2015

My IoT Adventure

I recently ordered a set of littleBits to get the kids involved with some basic circuitry (need to put the old computer engineering degree to use now and then). I couldn’t resist the cloudBit kit, with its ability to connect what we built to the internet. It also helped that the Bit Olympics were about to begin. A three-week competition to improve sports through technology was a perfect opportunity to share our project with a community.

The sport we selected for our project was ice hockey. Previously, the kids and I had worked on a Lego model of our minor league hockey team. A new arena had been built and the puck dropped on the inaugural season for the Lehigh Valley Phantoms last fall. After attending a game, my son was set on building a replica of the PPL Center out of Legos. Since there is no packaged set for this, it was a free build extraordinaire.

With our medium set, it was on to building out our littleBits circuit. There are certain traditional aspects of sports that should not be messed with. In our case, that is the horn and red light when a goal is scored. We kept those in place with a long LED and buzzer from the littleBits collection. However, we improved on it with the cloudBit. With a motion trigger in the goal, followers receive a text whenever a goal is scored. This is accomplished with a simple IFTTT recipe that we wrote, as the standard library did not have what we wanted.

You can check out our complete entry or the video of the working project.

Check out all the entries at the projects site, as well as the grand bitOlympian and other winners. I can now say I have officially arrived on the Internet of Things.

Tuesday, July 14, 2015

What’s New in Salesforce Wave Summer '15 Plus Release

Analytics Playground

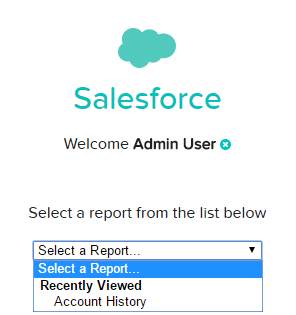

If you haven't tried the Analytics Playground yet, now is a great time to try it out. You can go through a tutorial and try out Analytics Cloud features. Best of all, you can even start exploring your own data. This can be an Excel or CSV file you upload or a Google Doc you connect to. Now, you can even connect to your Salesforce org and select an available report that you have created. Nothing compares to analyzing your own data in the playground!

Wave Dashboards in Salesforce

Additionally, there is now a <wave:dashboard></wave:dashboard> tag that can be embedded in Visualforce pages. For example, to display a dashboard on the home page, embed the following in a Visualforce page:

<wave:dashboard dashboardId="{dashboard id}" height="400px"></wave:dashboard>

Quick Actions in Lenses & Dashboards

Post to Chatter

Chatter is also included in the integration party. From a Wave dashboard, you can create a Chatter post that includes both the dashboard image and a link to the dashboard. Users can view the image within Chatter to see the dashboard details and then use the link to dive into the analysis.

Sales Wave (Pilot) & Service Wave (Limited Pilot)

As getting started with analytics can be daunting for many, Salesforce is releasing targeted templates to get you started. These templates contain the components needed to answer common questions. The Sales Wave App gets you running in about the time it takes to get a coffee. After you answer a handful of questions in a wizard-like interface, an Analytics Cloud app is created along with the datasets, dataflows, lenses and dashboards to analyze the sales side of your business from any device.

Sales Wave enables you to review and analyze your Forecasts, Pipelines, Team Coaching and an overall Business Review. All of the topics are personalized based on the questions you answered. For example, if you pick Industry as your primary method of Account segmentation, it is an available filter in the dashboard. Best of all, the data is refreshed daily, which lets you keep a pulse on your business. A similar, but much more limited, pilot is underway to pull in data from the Service Cloud for analysis.

Limit Changes

Summer ‘15 Plus includes increases that allow additional data loading. The maximum number of dataflow jobs in a rolling 24-hour period has increased from 10 to 24. This is a welcome improvement, as both failed and successful jobs count toward the limit. When working to get a data extract right, I have hit the 10-job limit before. Additionally, the maximum number of external data uploads in a rolling 24-hour period has increased from 20 to 50.
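Because failed runs count too, it can help to check how many runs remain before kicking off another attempt. The sketch below assumes you record the start time of each dataflow run yourself (the post does not describe a way to read this from the platform) and treats the window as the 24 hours ending now; the exact boundary handling on the Salesforce side is an assumption.

```python
"""Sketch: dataflow runs left in a rolling 24-hour window (limit of 24 per the post)."""
from datetime import datetime, timedelta
from typing import Iterable

DATAFLOW_LIMIT = 24
WINDOW = timedelta(hours=24)


def remaining_runs(run_starts: Iterable[datetime], now: datetime, limit: int = DATAFLOW_LIMIT) -> int:
    """Failed and successful runs both count, so pass every run's start time."""
    recent = [start for start in run_starts if now - WINDOW < start <= now]
    return max(limit - len(recent), 0)
```

Passing `limit=10` gives the same check under the old limit, which is the one I kept running into while iterating on an extract.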

Several new or changed limits also come with Summer ‘15 Plus. First, there is a maximum amount of external data that can be loaded, set at 50 GB. Second, the maximum length for dataset field names based on a CSV file has decreased from 255 to 40 characters. This is an important change to take note of, as it can affect your datasets.
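With the shorter limit, it is worth checking CSV headers before an upload rather than after. A minimal sketch, assuming the 40-character limit applies to the raw column names in the first row of the file:

```python
"""Sketch: find CSV column names longer than the 40-character dataset field name limit."""
import csv
import io

MAX_FIELD_NAME_LENGTH = 40


def overlong_field_names(csv_text, max_length=MAX_FIELD_NAME_LENGTH):
    """Return header names longer than max_length, in file order."""
    header = next(csv.reader(io.StringIO(csv_text)), [])
    return [name for name in header if len(name) > max_length]
```

Renaming any flagged columns in the source file before uploading keeps the dataset field names within the new limit.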

SAQL Enhancements

For those of us that push the Analytics Cloud to do more by writing SAQL, take note of the Use More Robust Syntax feature. This release introduces more robust syntax checking to prevent errors when executing SAQL code, which is a nice improvement. However, the changes may break existing SAQL code, which requires you to review and update your code. Be sure to check out the release notes for full details of what has changed. All of the breaking changes are called out.

- min() and max() functions only take measures as arguments

- Can't group a grouped stream

- Can't reference a pre-projection ID in a post-projection order

- Can't have two consecutive order statements on the same stream

- Quotation mark rules applied consistently

- Changes to foreach statement

- count() function takes grouped source stream as an argument

- SAQL queries must be compositional

- Filtering an empty array returns an empty result

- Explicit stream assignment required

- Out of order range filter returns false

What’s Next

With all the new features in Summer ‘15 Plus, it’s an exciting time to be working with the Analytics Cloud. I am especially glad to see tighter integration with Salesforce: when analysis surfaces data that needs attention, you can now take action quickly and easily. What’s your favorite new feature?

Tuesday, July 7, 2015

Salesforce Certification Changes

You may have heard about the new Salesforce certifications. If you are curious about what is coming, I will break down the details. In summary, new certifications are on the way in the Marketing and Developer areas. Let’s start with the changes in the Developer area.

Current Developer Certifications

When I first started working with Salesforce, I found the concept of a Developer somewhat confusing. With other technologies, such as Java, Microsoft .NET and Oracle, a Developer was always someone who programmed by writing code. While this is true in the Salesforce world, there is also the ability to create something new without programming. Other platforms do not have this concept of declarative development, which makes it unique.

Looking across other platforms, Administration universally refers to the process of running a system. It does not cover creating something new, which is what declarative development enables. So declarative development would not fall under Administration.

From the Salesforce perspective, it makes perfect sense to combine both declarative and programmatic development under the Developer umbrella. Looking at the current certification tracks, however, the Certified Developer exam covers the declarative aspects of the platform and does not include any programming. This certainly creates confusion for those outside the Salesforce world, and even for some within it.

While Advanced Developer does cover the programmatic aspects of the platform, it presents its own challenges. First, Advanced Developer is not a widely held certification, yet it is the only representation of programmatic development skills. One of the hurdles to certification is the programming assignment, which is only offered four times a year for up to 200 participants, as each assignment is hand-scored by multiple evaluators. This leads to a significant backlog of candidates and wait times of several months.

Compounding the problem, Salesforce has not offered the programming assignment since December 2014. (Yes, I am keeping track, as I passed the exam in early December and have been waiting ever since.) All of these factors create confusion in the marketplace about what a Developer is and the skills they possess.

New Developer Certifications

With three new certifications, Salesforce aims to clear up this confusion. First, Salesforce Certified App Builder bridges the gap between an Administrator and a programmer. It covers designing, building and implementing new custom applications using the declarative aspects of the platform.

Next, two programmatic certifications cover basic and advanced levels of programming skill. Salesforce Certified Platform Developer I is meant to demonstrate the ability to extend the platform through Apex and Visualforce. Salesforce Certified Platform Developer II is all about going deep with programming on the platform, including advanced topics.

More details on each can be found on Salesforce’s site. App Builder and Platform Developer I are currently going through beta testing, which should wrap up around July 10. Unfortunately, the beta program is full for these exams. Certified Platform Developer II will have beta exams starting at the end of July, and it appears invitations are going to those who currently hold the Advanced Developer certification. Hopefully, we will hear more details on general availability in August, or at Dreamforce ‘15 at the latest.

Marketing Cloud Certifications

At the end of last year, Salesforce introduced the Pardot Consultant certification. While Pardot has since been moved under Sales Cloud, it is still all about business-to-business marketing automation. Now Salesforce is expanding certifications to the Marketing Cloud. Two new certification tracks have been announced, focused on users who build, manage and analyze either Email or Social within the Marketing Cloud. While there are overview details on the certification site, the study guides and recommended online training have yet to be announced.

Future Certification Predictions (Safe Harbor)

If I look into my crystal ball, I don’t think this is the end of new certifications from Salesforce. During my Analytics Cloud Brown Belt Accreditation training in April, there were indications of a future certification in this area. I would also not be surprised to see a user-focused certification within Pardot, just like with the Marketing Cloud.

Finally, the Certified Technical Architect track has obstacles similar to those of Advanced Developer: a low number of certified individuals, long wait times for review boards and, from what I have heard, relatively low pass rates. If we apply the changes from Advanced Developer to Platform Developer, a Technical Architect certification path with multiple levels and steps along the way would make sense and resonate with the market.

Personally, I look forward to putting my skills to the test with these new certifications once they are available and will be sure to share my test taking experiences.

Wednesday, June 24, 2015

Salesforce Analytics Cloud Explained

Salesforce made a splash at Dreamforce ‘14 with Wave, and there has been a lot of excitement on the topic since then. Combine frequent releases of new Analytics Cloud innovations with limited access to a developer environment, and it is no surprise that confusion persists.

Analytics Cloud vs. Wave

A good place to start is the difference between Analytics Cloud and Wave. While these terms are often used interchangeably, there are key differences. Wave is the platform, while Analytics Cloud is the product. A good analogy: Sales Cloud and Service Cloud are products that run on the Salesforce1 Platform. So let’s take a look at the features of each.

Wave

As the underlying technology platform, Wave is a schema-free, non-relational database. Consisting of columnar key-value pairs, Wave relies on search-based technologies and inverted indexes to quickly deliver results. While that’s a lot of big data buzzwords, what it means is that Wave is not like your traditional data warehouse. It is built to handle large volumes of structured and unstructured data and to quickly provide results based on search queries. If you would like to learn more, take a look at Redis, which is a data structure server that will help you better understand Wave.
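To make "inverted index" a bit more concrete, here is a toy Python sketch. It only illustrates the general idea (map each field value to the set of rows that contain it, then intersect those sets to answer a query) and makes no claim about how Wave actually stores or indexes data; the function names and sample rows are hypothetical.

```python
from collections import defaultdict


def build_inverted_index(rows):
    """Map each (field, value) pair to the set of row ids containing it."""
    index = defaultdict(set)
    for row_id, row in enumerate(rows):
        for field, value in row.items():
            index[(field, value)].add(row_id)
    return index


def query(index, **criteria):
    """Return sorted row ids matching every field=value criterion (AND)."""
    matches = [index.get((field, value), set()) for field, value in criteria.items()]
    if not matches:
        return []
    return sorted(set.intersection(*matches))


if __name__ == "__main__":
    rows = [
        {"Region": "East", "Stage": "Closed Won"},
        {"Region": "West", "Stage": "Closed Won"},
        {"Region": "East", "Stage": "Prospecting"},
    ]
    index = build_inverted_index(rows)
    print(query(index, Region="East", Stage="Closed Won"))  # [0]
```

The point of the structure is that a query never scans the rows themselves; it only looks up and intersects precomputed sets, which is why search-style lookups stay fast as data grows.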

Analytics Cloud

As the flagship product on Wave, Salesforce Analytics Cloud is a mobile-first solution that allows data to be sourced from multiple locations, manipulated in real-time, and shared with other users. The key elements the Analytics Cloud delivers are the following:

- Explore – allows everyone, not just data analysts, to find answers in data

- Collaborate – one place for users to get and share answers on the business

- Cloud – as a native cloud solution, it is up and running quickly, scales easily and provides one source of the truth

- Mobile – all the answers you need in the palm of your hand

Learning More

To learn more about the Salesforce Analytics Cloud, check out the following resources. At a recent Lehigh Valley Salesforce Developer User Group meeting, I explained the Salesforce Analytics Cloud, including a demonstration of the technologies. The presentation can be found on SlideShare, and a recording of the session is available on YouTube.

Monday, June 15, 2015

Setting Up Lightning Connect in Salesforce

External Data Source

First, an external data source needs to be set up in Salesforce. If you would like to learn more about Lightning, check out the Lightning Webinar Series. The data source is created from Setup with the following steps:

- Go to Setup | Develop | External Data Sources and click New Data Source.

- Provide a label and a name. I used DynamicsAX for both values

- From Type, select Lightning Connect: OData 2.0

- Take note of the 2.0, as this is the minimum OData version that Lightning Connect supports

- There may be additional options, especially in a Summer '15 or later org, since Summer '15 introduced the ability to create Lightning Connect Custom Adapters

- After selecting this option, additional Parameters are available

- URL - the Dynamics OData Source in the form of https://<host>.<domain>:8101/DynamicsAx/Services/ODataQueryService/

- A series of options follows, for which the default values are okay

- Format - our source is AtomPub

- Special Compatibility - Default is appropriate

- Authentication details for our source must be specified

- Identity Type - Named Principal, to specify a static username/password for Dynamics AX

- Authentication Protocol - Password Authentication

- Supply the Username and Password to use to connect

- As we are providing credentials, our URL must be https

- After Saving, there is an option to Validate and Sync

- Salesforce has made quite a few improvements between Spring '15 and Summer '15 in this area.

- When testing in Spring '15, I had cases where no error messages were displayed or only generic messages.

- After the Summer upgrade, I saw more consistent messages with additional details to help troubleshoot.

- Ensure you have a valid SSL certificate, or you will not be able to validate and sync

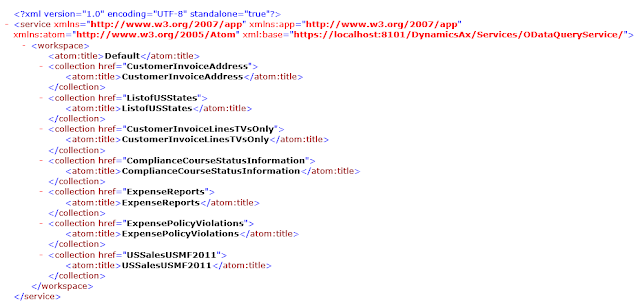

- A list of OData tables is presented to sync. In my case, I selected ListofUSStates. This is a simple listing of the US States and Names used in Dynamics.

External Objects

Custom Tab

Success…?

We Have a Problem

My first stop was OData.org to check out the spec. DataServiceVersion is defined in the Service Metadata Document and indicates the version of OData used. Next, I inspected the metadata for our Dynamics AX source, which can be done by adding /$metadata to the end of our base URL.
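Reading the version out of the metadata document can also be scripted. This minimal sketch assumes the CSDL layout used by OData versions 1.0 through 3.0, where DataServiceVersion is an attribute on the edmx:DataServices element, and it falls back to 1.0 when the attribute is missing. `fetch_metadata`, `data_service_version` and `meets_minimum` are hypothetical helper names; authentication is left to whatever opener you pass in.

```python
import urllib.request
import xml.etree.ElementTree as ET

# Namespaces used by OData 1.0-3.0 metadata documents (CSDL/EDMX).
EDMX_NS = "http://schemas.microsoft.com/ado/2007/06/edmx"
META_NS = "http://schemas.microsoft.com/ado/2007/08/dataservices/metadata"


def fetch_metadata(base_url, opener=None):
    """Download <base_url>/$metadata; pass an opener that handles auth."""
    opener = opener or urllib.request.build_opener()
    with opener.open(base_url.rstrip("/") + "/$metadata") as resp:
        return resp.read()


def data_service_version(metadata_xml):
    """Return the declared DataServiceVersion, defaulting to '1.0'."""
    root = ET.fromstring(metadata_xml)
    services = root.find(f"{{{EDMX_NS}}}DataServices")
    if services is None:
        raise ValueError("no edmx:DataServices element in metadata")
    # Per the spec, a missing version means 1.0 should be assumed.
    return services.get(f"{{{META_NS}}}DataServiceVersion", "1.0")


def meets_minimum(version, minimum=(2, 0)):
    """Compare dotted versions numerically, e.g. '1.0' < (2, 0)."""
    return tuple(int(part) for part in version.split(".")) >= minimum


if __name__ == "__main__":
    sample = (
        '<edmx:Edmx Version="1.0" xmlns:edmx="' + EDMX_NS + '">'
        '<edmx:DataServices xmlns:m="' + META_NS + '" m:DataServiceVersion="1.0"/>'
        "</edmx:Edmx>"
    )
    version = data_service_version(sample)
    print(version, meets_minimum(version))  # 1.0 False
```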

Conclusion

At this point, I am reasonably certain Dynamics AX 2012 uses OData version 1.0, while Lightning Connect requires 2.0. Just to leave no stone unturned, it is time to call in the big guns and verify with Salesforce Product Management. After taking a look at my setup, they reached the same conclusion: Dynamics AX 2012’s OData 1.0 implementation was not supported.

What I did learn from the Product Management team is that Summer ‘15 loosened some of the restrictions on OData 1.0 implementations. Rather than immediately excluding the service based on the version, Lightning Connect will attempt to use the service. Only if the service does not support the necessary conventions, like $select, will it fail. This can be useful in cases where DataServiceVersion is not specified, as the OData spec indicates that version 1.0 should be assumed.
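A rough way to see whether a service honors $select (a system query option that, as far as I know, arrived with OData 2.0) is to request it directly in a browser and compare the response with the unfiltered feed. The hypothetical helper below only builds the probe URL; the host and field names are placeholders, so substitute real property names from your $metadata.

```python
from urllib.parse import quote


def select_probe_url(base_url, entity_set, fields, top=1):
    """Build an OData URL that asks for only `fields` via $select."""
    select = quote(",".join(fields), safe=",")
    return f"{base_url.rstrip('/')}/{quote(entity_set)}?$select={select}&$top={top}"


if __name__ == "__main__":
    # Host and field names are hypothetical placeholders.
    print(select_probe_url(
        "https://ax.example.com:8101/DynamicsAx/Services/ODataQueryService/",
        "ListofUSStates",
        ["StateId", "Name"],
    ))
```

If the response still contains every property, or the service returns an error, $select is likely not supported.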

While I did not end up with Dynamics data available in Salesforce via Lightning Connect, I did learn a lot along the way. Once you have an OData source available, setup in Salesforce is fast and easy. I am looking forward to the next attempt to use Lightning Connect. Besides, there are a lot of other ways to get Dynamics data into Salesforce.

Monday, June 8, 2015

Dynamics AX Setup for OData Integration with Salesforce

Environment Setup

Obtaining a Dynamics AX demo environment was easy. With Microsoft Lifecycle Services, it was just a few steps to be up and running:

- Log into Lifecycle Services

- Create a project

- Connect to an Azure subscription (I used a 30 day free trial)

- Deploy a Dynamics AX demo environment on Azure

- Log in via Remote Desktop and open the Dynamics AX client

DYNAMICS AX and ODATA Service

With Dynamics AX, an OData service is available out of the box, with access to the queries set up in AX. It is accessible via http://<host>.<domain>:8101/. This provides a list of the available queries, which can be called by adding the query name to the end of the URL. For example, get a list of all US states via https://<host>.<domain>:8101/DynamicsAX/Services/ODataQueryService/ListofUSStates. You are required to authenticate to the service first to ensure you are authorized to access the specified resources. Now the astute reader may notice the issue we will have with Salesforce: as we are authenticating to the AX OData Service, we must specify a secure (HTTPS) URL when setting up our External Data Source within Salesforce.

ENABLING HTTPS for Dynamics AX Odata

As you will see, the actual changes needed to enable HTTPS are not that extensive. However, I could not find a clear set of instructions, so good old-fashioned research and trial and error led to the following setup tasks.

First, some background: the AX OData Service is a Windows Communication Foundation (WCF) app that runs as a service (Ax32Serv.exe). While some WCF apps run inside Internet Information Services (IIS), this one is standalone. As such, the setup is not done inside of IIS.

SSL Certificate

An SSL certificate is needed in order to communicate over HTTPS. If you have a certificate that matches the final domain you will deploy the OData Service to, use it now. If not, you can create a self-signed certificate. These instructions are for IIS 7, and a quick web search will bring up other options. When configuring Lightning Connect in Salesforce, a self-signed certificate will not be trusted and the connection will not work, but it gets us through the first step.

Port Binding

Once you have your SSL certificate, we need to configure port 8101 to use it. Full details can be found in the article Configure a Port with an SSL Certificate. The summary is:

- Check the port configuration from the command line

- netsh http show sslcert ipport=0.0.0.0:8101

- No results indicates nothing is bound

- Get the SSL certificate's thumbprint from MMC

- Bind the SSL cert to the port from command line

- netsh http add sslcert ipport=0.0.0.0:8101 certhash=<thumbprint> appid={00112233-4455-6677-8899-AABBCCDDEEFF}

- Use the check command again to verify the bound cert
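One snag that is commonly reported with this step: copying the thumbprint out of the MMC certificate details dialog can bring along spaces and an invisible leading Unicode character, which makes netsh reject the hash. This sketch, with hypothetical helper names, cleans the value and assembles the bind command shown above; the appid is the same placeholder GUID used in the example, and the thumbprint in the usage is made up.

```python
import re

# Placeholder application GUID from the netsh example above.
PLACEHOLDER_APP_ID = "{00112233-4455-6677-8899-AABBCCDDEEFF}"


def clean_thumbprint(raw):
    """Keep only hex digits; a SHA-1 thumbprint is 40 of them."""
    digits = re.sub(r"[^0-9A-Fa-f]", "", raw)
    if len(digits) != 40:
        raise ValueError(f"expected 40 hex digits, found {len(digits)}")
    return digits


def netsh_add_sslcert(raw_thumbprint, port=8101, app_id=PLACEHOLDER_APP_ID):
    """Build the bind command for all local addresses on `port`."""
    return (f"netsh http add sslcert ipport=0.0.0.0:{port} "
            f"certhash={clean_thumbprint(raw_thumbprint)} appid={app_id}")


if __name__ == "__main__":
    # As pasted from MMC: invisible U+200E mark plus spaces (made-up value).
    pasted = "\u200e" + " ".join(["a1"] * 20)
    print(netsh_add_sslcert(pasted))
```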

Setup URL Reservation

- Check the existing reservation. There is likely an HTTP reservation already in place

- netsh http show urlacl url=https://+:8101/DynamicsAx/Services/

- Be sure to include the trailing /

- No results means there is no reservation

- Delete any existing reservation

- netsh http delete urlacl url=https://+:8101/DynamicsAx/Services/

- Add a new reservation with HTTPS

- netsh http add urlacl url=https://+:8101/DynamicsAx/Services/ user=CONTOSO\admin

- Be sure to change the user to the user that runs the service

- Use the check command to verify the reservation

Ax32Serv.exe Config File Changes

- Find the <services> section and look for the service name="Microsoft.Dynamics.AX.Framework.Services.ODataQueryService.ODataQueryService"

- Update the address of the endpoint to be https instead of http

- The service definition references a behaviorConfiguration="ODataQueryServiceBehavior"

- Locate this behavior name in the <behaviors> section

- Change httpGetEnabled to httpsGetEnabled

- Change httpGetUrl to httpsGetUrl and modify the address to be https instead of http

- The service definition references binding="webHttpBinding" and bindingConfiguration="ODataQueryServiceBinding"

- Locate this binding in the <bindings> section

- Change the mode in <security> from "TransportCredentialOnly" to "Transport"

- Change the clientCredentialType from "Windows" to "Basic"

- I removed the proxyCredentialType="Windows" attribute as well
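Editing the config by hand works fine, but if you need to repeat it across environments, the same changes can be scripted. This is a minimal sketch, assuming the config follows the standard WCF layout described above, with the service, behavior and binding tied together by name; `switch_odata_to_https` is my own hypothetical function. ElementTree rewrites formatting, so back up Ax32Serv.exe.config first and review the result before restarting the service.

```python
import xml.etree.ElementTree as ET

SERVICE_NAME = ("Microsoft.Dynamics.AX.Framework.Services."
                "ODataQueryService.ODataQueryService")


def to_https(url):
    return url.replace("http://", "https://", 1)


def switch_odata_to_https(config_xml):
    """Apply the HTTPS/Basic changes listed above; returns the new XML text."""
    # Keep XML comments instead of silently dropping them (Python 3.8+).
    parser = ET.XMLParser(target=ET.TreeBuilder(insert_comments=True))
    root = ET.fromstring(config_xml, parser=parser)

    service = root.find(f".//services/service[@name='{SERVICE_NAME}']")
    if service is None:
        raise ValueError("OData query service definition not found")

    binding_names = set()
    for endpoint in service.findall("endpoint"):
        # 1. Endpoint address: http -> https
        address = endpoint.get("address")
        if address:
            endpoint.set("address", to_https(address))
        if endpoint.get("bindingConfiguration"):
            binding_names.add(endpoint.get("bindingConfiguration"))

    # 2. Metadata behavior: httpGet* attributes become httpsGet*
    behavior = service.get("behaviorConfiguration")
    for meta in root.findall(f".//behaviors//behavior[@name='{behavior}']/serviceMetadata"):
        if "httpGetEnabled" in meta.attrib:
            meta.set("httpsGetEnabled", meta.attrib.pop("httpGetEnabled"))
        if "httpGetUrl" in meta.attrib:
            meta.set("httpsGetUrl", to_https(meta.attrib.pop("httpGetUrl")))

    # 3. Binding security: Transport mode with Basic client credentials
    for name in binding_names:
        path = f".//bindings/webHttpBinding/binding[@name='{name}']/security"
        for security in root.findall(path):
            security.set("mode", "Transport")
            transport = security.find("transport")
            if transport is not None:
                transport.set("clientCredentialType", "Basic")
                transport.attrib.pop("proxyCredentialType", None)

    return ET.tostring(root, encoding="unicode")
```

For example, read the config file in binary mode, pass its contents in, and write the returned text to a new file so you can compare it with the original before swapping it in.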

Firewall and Azure Endpoint Management

Verifying Locally

https://<instance>.cloudapp.net:8101/DynamicsAx/Services/ODataQueryService/

Again, you will need to click through the certificate error and supply credentials. You can also add the self-signed certificate to your local Trusted Root Certification Authorities store. To test with Excel, open a new workbook and go to Data -> From Other Sources -> From OData Data Feed. Supply the URL and credentials, and click Next. Pick your table; I used ListofUSStates to keep it simple. Click Finish to save the data source, select a location in the sheet, and your Dynamics AX data is now in Excel. Here is an example list of states from Dynamics pulled into Excel.

Lightning Connect Preparation
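Before pointing Lightning Connect at the service, a scripted check can confirm that the endpoint answers over HTTPS with Basic credentials, the combination configured above. This is a minimal sketch using only the Python standard library; the function names are hypothetical. By default the certificate is verified the way a public client would verify it, so a self-signed certificate fails unless you pass its exported file (Base-64/PEM format) as `ca_file`, and even then Salesforce itself will still not trust it.

```python
import base64
import ssl
import urllib.request


def basic_auth_request(url, username, password):
    """GET request carrying an HTTP Basic Authorization header."""
    if not url.lower().startswith("https://"):
        # Basic credentials are only base64-encoded, so insist on TLS.
        raise ValueError("use an https:// URL when sending credentials")
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(url, headers={"Authorization": f"Basic {token}"})


def check_endpoint(url, username, password, ca_file=None, timeout=30):
    """Return the HTTP status code; TLS or auth problems raise exceptions.

    ca_file: optional PEM file to trust, e.g. the exported self-signed cert.
    """
    context = ssl.create_default_context(cafile=ca_file)
    request = basic_auth_request(url, username, password)
    with urllib.request.urlopen(request, context=context, timeout=timeout) as resp:
        return resp.status


# Example (hypothetical values; replace with your instance and service account):
# check_endpoint(
#     "https://<instance>.cloudapp.net:8101/DynamicsAx/Services/ODataQueryService/",
#     "CONTOSO\\admin", "password", ca_file="ax-selfsigned.pem")
```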

Thursday, May 14, 2015

Trailhead: New Modules and Guided Learning

New options for learning Salesforce emerge all the time. As an update to my earlier article on How to Learn Salesforce, Trailhead has been recently updated with new modules. There is now a set of modules covering the new Lightning technologies – Connect, App Builder, Components and Process Builder.

These are a great set of modules if you want to get your hands dirty with guided practice. I especially liked the Lightning Connect module. The idea of being able to connect to external sources with OData is intriguing. However, from a practice standpoint without an OData source, it is hard to try it out. This module solves that issue by giving you a source to connect with. The best part is once you finish the exercises, you can experiment on your own.

Another new module is Reports and Dashboards. I have always had an affinity for reporting and analytics, as it is where I started my professional career. One of the challenges with learning reporting is context. You can learn the mechanics of a tool, but without business requirements it lacks substance. The Reports and Dashboards module helps provide that background, making the learning more meaningful.

Perhaps the most exciting development is guided learning paths to blaze your own trail. When Trailhead was just a few modules, it was easy to pick and choose. Now, with more content, finding what you are looking for can be more difficult. If you are a new admin, sifting through the more advanced developer options can be a challenge. Now there are no worries, as Guided Paths exist for the following:

With each track, you get an overview of the course time remaining and the points you have earned. Personally, I have made it through most of the content, with a couple of modules left in the Intermediate Developer track. I am also excited about what may come. Looking at the track naming and interjecting a bit of speculation, I am looking forward to advanced topics for Admins and Developers. What have you enjoyed most about Trailhead?

Sunday, May 10, 2015

Loading Analytics Cloud Directly from Excel

Since my previous post on Combining Salesforce and External Data, it is now even easier to load the example sales data. With the release of Salesforce Wave Connector for Excel, no longer do you need to export data to a comma separated value (csv) file first. Let’s take a look at using the connector to directly populate Salesforce Analytics Cloud from our Excel file.

Salesforce Wave Connector for Excel Installation

First, add the Salesforce Wave Connector for Excel from the Office Store; it is a free install. Alternatively, you can install it directly from within Excel. Using Excel 2013, my steps were the following:

- Go to the Insert tab

- Click Apps for Office, See All, then Featured Apps

- Search for Wave Connector, Select the result and click Trust It

- A side-bar opens, and you supply your Microsoft account details

With the Wave Connector installed, it’s time to specify the Salesforce credentials to your Analytics Cloud environment. You must also grant permission to the Wave Connector, like any OAuth app. You can check out the help page if you need additional details on installing the connector.

Uploading Data from Excel

Now we need to specify the Excel data to load. Simply select a range of columns and rows and provide a name to use in Analytics Cloud for your dataset. You can also review the number of Columns and Rows (32,767 is the row limit per import) as well as preview the Column Names and Data types.

If you want to change a data type, look for a column that is hyperlinked. Clicking on it will cycle through the supported data types (text and date for dimensions, and numeric for measures).

Then simply click Submit Data and the magic happens. The upload progress will be reported and a summary of columns and rows displayed. You can then jump to Analytics Cloud or Import Another Dataset.

Success

Head over to your Analytics Cloud environment and look for your new dataset. You may have to refresh the page if it takes a few minutes for the import to complete. Our new SalesDataGenerator dataset is now ready to be analyzed through lenses and dashboards.

Additional Notes

- If you need to change Analytics Cloud environment, use the drop down next to your login in the Wave Connector for Excel to Log Out and supply new credentials

- Administrators, take note of additional steps if you are using Office 365, outlined in the help files

- Use the ? next to Get Started in the Wave Connector for Excel for additional details and troubleshooting tips. Users of Excel in Office 365 need to remember to Bind Current Selection when uploading data

- Remember you are limited to 20 datasets per day from external sources, and each upload from the Wave Connector for Excel counts towards this limit