Tuesday, October 16, 2018

5 Steps to Killer Einstein Analytics Dashboards

Dashboards need to be a visual representation of the user’s business goals, with insights that are actionable. Both art and science are required to create a meaningful dashboard that engages users. Here are 5 steps to building killer Einstein Analytics dashboards that users will love.

Monday, March 19, 2018

Adding Subtotals to Einstein Analytics Table

Tuesday, August 1, 2017

Analytics Explained - Compare Table Unleashed

I've created an unmanaged package that explores how to unlock the full power of the compare table, no SAQL coding required. Learn how to drive better insights in Einstein Analytics through the following:

- Building killer dashboards without writing code

- Creating advanced compare table charts, such as timeline charts with separate lines by year

- Tips and tricks for creating formula columns and using functions

- Leveraging advanced functions for rankings, period over period changes and running totals

- Strategies to handle null values and turn 'No Results Found' into zeros

Monday, April 24, 2017

Summer 17 – Hottest Analytics Cloud Features

As the weather heats up, thoughts turn toward Summer. After reviewing the release notes and working in a pre-release org, here are the hottest features coming in the Analytics Cloud Summer 17 release.

Wednesday, April 12, 2017

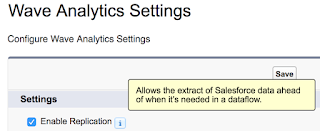

Salesforce Wave Explained - Replication

Salesforce Analytics Cloud includes a replication feature that will pre-populate Wave with Salesforce data. Replicated data can greatly improve dataflow execution times.

Enabling replication also consolidates multiple digest statements in dataflows into a single extract. If you have used the Dataset builder and selected the same object more than once, your dataflow will digest that object multiple times.
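To see whether a dataflow would benefit from that consolidation, you can scan its JSON for objects that are digested more than once. The following is a minimal sketch, assuming the dataflow has been downloaded and parsed into a Python dictionary; the node names in `sample_dataflow` are hypothetical and only illustrate the shape of the data.

```python
from collections import defaultdict


def find_repeated_digests(dataflow):
    """Return {object name: [node names]} for objects digested more than once."""
    by_object = defaultdict(list)
    for node_name, node in dataflow.items():
        if node.get("action") == "sfdcDigest":
            by_object[node["parameters"]["object"]].append(node_name)
    return {obj: names for obj, names in by_object.items() if len(names) > 1}


# Hypothetical dataflow: Account was selected twice in the Dataset builder.
sample_dataflow = {
    "101_Load_Account": {
        "action": "sfdcDigest",
        "parameters": {"object": "Account", "fields": [{"name": "Id"}]},
    },
    "103_Load_Account_Again": {
        "action": "sfdcDigest",
        "parameters": {"object": "Account", "fields": [{"name": "Name"}]},
    },
    "105_Load_Opportunity": {
        "action": "sfdcDigest",
        "parameters": {"object": "Opportunity", "fields": [{"name": "Id"}]},
    },
}

repeated = find_repeated_digests(sample_dataflow)
print(repeated)
```

Any object reported here is digested several times per run without replication.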

Simply check the option and away you go....well almost. There are a few considerations to take into account when using replicated data. Here's what to keep in mind as well as a list of my Tips & Tricks.

Tuesday, March 21, 2017

Salesforce Wave Explained - Independent Chart Comparison

While this may seem like a daunting request at first, it's actually quite simple to build this dashboard. In fact, it can be done without any SAQL and only a few lines of JSON. Here is how to build it.

Monday, June 27, 2016

Salesforce Wave Explained - Cogroup

Wednesday, January 13, 2016

A Better Way to Manage JSON and SAQL in Analytics Cloud

It’s really easy to get started with Analytics Cloud. Load an internal or external dataset, create a couple of lenses, clip them to a dashboard and relish the insights. To get the most out of Wave, however, you have to dive under the covers and work with JSON and SAQL (Salesforce Analytics Query Language).

Previously, this meant spinning up your favorite editor, digging through the documentation and a lot of copy and paste. Thanks to the innovation coming from Salesforce Labs, there is a new tool that enables inline editing of Metadata/JSON as well as SAQL. Let’s take a look and see how much time you can save.

Tuesday, July 14, 2015

What’s New in Salesforce Wave Summer '15 Plus Release

Analytics Playground

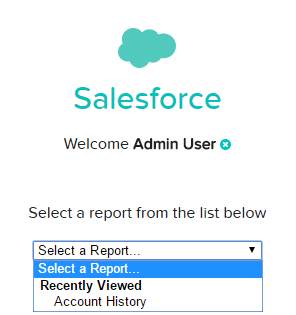

If you haven't tried the Analytics Playground yet, now is a great time to try it out. You can go through a tutorial and try out Analytics Cloud features. Best of all, you can even start exploring your own data. This can be an Excel or CSV file you upload or a Google doc you connect to. Now, you can even connect to your Salesforce org and select an available report that you have created. Nothing compares to analyzing your own data in the playground!

Wave Dashboards in Salesforce

Additionally, there is now a <wave:dashboard></wave:dashboard> tag that can be embedded in Visualforce pages. For example, display a dashboard on the home page with the following,

<wave:dashboard dashboardId="{dashboard id}" height="400px"></wave:dashboard> in a

Quick Actions in Lenses & Dashboards

Post to Chatter

Chatter is also included in the integration party. From a Wave dashboard, a Chatter post can be created that includes both the dashboard image and a link to the dashboard. Users can access the image within Chatter to see the dashboard details and then use the link to dive into the analysis.

Sales Wave (Pilot) & Service Wave (Limited Pilot)

As getting started with Analytics can be daunting to many, Salesforce is releasing targeted templates to get you started. These templates contain the components needed to answer common questions. The Sales Wave App gets you running in about the time it takes to get a coffee. After answering a handful of questions presented in a wizard-like interface, an Analytics Cloud App is created along with the datasets, dataflows, lenses and dashboards to analyze the sales side of your business from any device.

Sales Wave enables you to review and analyze your Forecasts, Pipelines, Team Coaching and an overall Business Review. All of the topics are personalized based on the questions you answered. For example, if you pick Industry as your primary method of Account segmentation, it is an available filter in the dashboard. Best of all, the data is refreshed daily, which lets you keep a pulse on your business. A similar, but much more limited, pilot is underway to pull in data from the Service Cloud for analysis.

Limit Changes

Summer ‘15 Plus included increases that allow additional data loading. The maximum number of dataflow jobs in a rolling 24-hour period has been increased from 10 to 24. This is a welcome improvement, as both failed and successful jobs count towards the limit. When working to get a data extract right, I have hit the old limit of 10 before. Additionally, the maximum number of external data uploads in a rolling 24-hour period has increased from 20 to 50.

Several new or changed limits also come with Summer ‘15 Plus. First, there is a maximum amount of external data to load, which is set to 50 GB. Second, the maximum length for dataset field names based on a CSV file has been decreased to 40 characters from 255. This is an important change to take note of, as it can affect your datasets.
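Since the shorter field name limit can affect existing CSV loads, it may be worth checking your file headers before uploading. Below is a minimal sketch that assumes the dataset field names are taken directly from the CSV header row; the sample column names are made up for illustration.

```python
import csv
import io

MAX_FIELD_NAME_LENGTH = 40  # limit for CSV-based field names quoted above


def overlong_headers(csv_text, limit=MAX_FIELD_NAME_LENGTH):
    """Return the header names in csv_text that are longer than limit."""
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader, [])  # empty input yields no headers
    return [name for name in header if len(name) > limit]


# Hypothetical file: the third column name is 52 characters long.
sample_csv = (
    "Account,Product,Total_Contract_Value_Including_Renewals_And_Services\n"
    "Acme,Widget,100\n"
)

for name in overlong_headers(sample_csv):
    print(f"{name} is {len(name)} characters; shorten it to {MAX_FIELD_NAME_LENGTH} or fewer")
```

To check a real file, read its contents into a string first and pass that to `overlong_headers`.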

SAQL Enhancements

For those of us that push the Analytics Cloud to do more by writing SAQL, take note of the Use More Robust Syntax feature. This release introduces more robust syntax checking to prevent errors when executing SAQL code, which is a nice improvement. However, the changes may break existing SAQL code, which requires you to review and update your code. Be sure to check out the release notes for full details of what has changed. All of the breaking changes are called out.

- min() and max() functions only take measures as arguments

- Can't group a grouped stream

- Can't reference a pre-projection ID in a post-projection order

- Can't have two consecutive order statements on the same stream

- Quotation mark rules applied consistently

- Changes to foreach statement

- count() function takes grouped source stream as an argument

- SAQL queries must be compositional

- Filtering an empty array returns an empty result

- Explicit stream assignment required

- Out of order range filter returns false

What’s Next

With all the new features in Summer ‘15 Plus, it’s exciting to be working with the Analytics Cloud. I am excited to see tighter integration with Salesforce. Now when an analysis finally finds the data in need of attention, you can quickly and easily take action. What’s your favorite new feature?

Wednesday, June 24, 2015

Salesforce Analytics Cloud Explained

Salesforce made a splash at Dreamforce ‘14 with Wave, and there has been a lot of excitement on the topic since then. Frequent releases of new Analytics Cloud innovations, coupled with limited access to a developer environment, mean confusion still persists.

Analytics Cloud vs. Wave

A good place to start is the difference between Analytics Cloud and Wave. While these terms are often used interchangeably, there are key differences. Wave is the platform, while Analytics Cloud is the product. A good analogy is that Sales Cloud and Service Cloud are products that run on the Salesforce1 Platform. So let’s take a look at the features of each.

Wave

As the underlying technology platform, Wave is a schema-free, non-relational database. Consisting of columnar-based key-value pairs, Wave relies on search-based technologies and inverted indexes to quickly deliver results. While that’s a lot of big data buzzwords, what it means is that Wave is not like your traditional data warehouse. It is built to handle large volumes of structured and unstructured data and to quickly provide results based on search queries. If you would like to learn more, take a look at Redis, which is a data structure server that will help you better understand Wave.
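To make the inverted index idea concrete, here is a small illustrative sketch in Python. It is not how Wave is implemented; it only shows the general technique of mapping each value in a column to the set of rows that contain it, so that a filter becomes a set lookup rather than a scan. The sample rows are invented.

```python
from collections import defaultdict


def build_inverted_index(rows, column):
    """Map each distinct value in column to the set of row ids holding it."""
    index = defaultdict(set)
    for row_id, row in enumerate(rows):
        index[row[column]].add(row_id)
    return index


# Invented sample data for illustration only.
rows = [
    {"Name": "Acme", "Industry": "Energy", "Type": "Customer"},
    {"Name": "Globex", "Industry": "Retail", "Type": "Customer"},
    {"Name": "Initech", "Industry": "Energy", "Type": "Customer"},
    {"Name": "Umbrella", "Industry": "Energy", "Type": "Prospect"},
]

industry_index = build_inverted_index(rows, "Industry")
type_index = build_inverted_index(rows, "Type")

# Combining two filters is an intersection of two row-id sets.
matching_ids = sorted(industry_index["Energy"] & type_index["Customer"])
print([rows[i]["Name"] for i in matching_ids])
```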

Analytics Cloud

As the flagship product on Wave, Salesforce Analytics Cloud is a mobile-first solution that allows data to be sourced from multiple locations, manipulated in real-time, and shared with other users. The key elements the Analytics Cloud delivers are the following:

- Explore – allows everyone, not just data analysts, to find answers in data

- Collaborate – one place for users to get and share answers on the business

- Cloud – as a native cloud solution, it is up and running quickly, scales easily and provides one source of the truth

- Mobile – all the answers you need in the palm of your hand

Learning More

To learn more about the Salesforce Analytics Cloud, check out the following resources. As part of a recent Lehigh Valley Salesforce Developer User Group meeting, I explained the Salesforce Analytics Cloud, including a demonstration of the technologies. The presentation can be found on SlideShare, while a recording of the session is available on YouTube.

Sunday, May 10, 2015

Loading Analytics Cloud Directly from Excel

Since my previous post on Combining Salesforce and External Data, it is now even easier to load the example sales data. With the release of Salesforce Wave Connector for Excel, no longer do you need to export data to a comma separated value (csv) file first. Let’s take a look at using the connector to directly populate Salesforce Analytics Cloud from our Excel file.

Salesforce Wave Connector for Excel Installation

First, you want to add the Salesforce Wave Connector for Excel from the Office store, which is a free install. Alternatively, you can install from directly within Excel. Using Excel 2013, my steps were the following:

- Go to the Insert tab

- Click Apps for Office, See All, then Featured Apps

- Search for Wave Connector, select the result and click Trust It

- A side-bar opens, and you supply your Microsoft account details

With the Wave Connector installed, it’s time to specify the Salesforce credentials to your Analytics Cloud environment. You must also grant permission to the Wave Connector, like any OAuth app. You can check out the help page if you need additional details on installing the connector.

Uploading Data from Excel

Now we need to specify the Excel data to load. Simply select a range of columns and rows and provide a name to use in Analytics Cloud for your dataset. You can also review the number of Columns and Rows (32,767 is the row limit per import) as well as preview the Column Names and Data types.
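If your worksheet has more rows than the per-import limit, one option is to split the data into several ranges and import each separately. The sketch below shows the arithmetic only; it assumes the 32,767 limit applies to data rows, and note that each batch would be its own upload.

```python
ROW_LIMIT_PER_IMPORT = 32767  # row limit per import quoted above


def split_into_imports(rows, limit=ROW_LIMIT_PER_IMPORT):
    """Split rows into consecutive batches of at most limit rows each."""
    return [rows[start:start + limit] for start in range(0, len(rows), limit)]


# Illustration with 70,000 placeholder rows.
rows = list(range(70000))
batches = split_into_imports(rows)
print([len(batch) for batch in batches])  # prints [32767, 32767, 4466]
```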

If you want to change a data type, look for a column that is hyperlinked. Clicking on it will cycle through the supported data types (text and date for dimensions and numeric for measures).

Then simply click Submit Data and the magic happens. The upload progress will be reported and a summary of columns and rows displayed. You can then jump to Analytics Cloud or Import Another Dataset.

Success

Head over to your Analytics Cloud environment and look for your new dataset. You may have to refresh the page if it takes a few minutes for the import to complete. Our new SalesDataGenerator dataset is now ready to be analyzed through lenses and dashboards.

Additional Notes

- If you need to change Analytics Cloud environment, use the drop down next to your login in the Wave Connector for Excel to Log Out and supply new credentials

- Administrators, take note of additional steps if you are using Office 365, outlined in the help files

- Use the ? next to Get Started in the Wave Connector for Excel for additional details and troubleshooting tips. Excel in Office 365 users need to remember to Bind Current Selection when uploading data

- Remember you are limited to 20 datasets per day from external sources, and each upload from the Wave Connector for Excel counts towards this limit

Sunday, May 3, 2015

How to Combine Salesforce and External Data in Analytics Cloud

External Data

First, you want to load your external data from a CSV (comma-separated value) file by creating a new dataset. I have created a sample Sales Data file that contains sales by account broken down by product and time period. I have also included my Sales Data.json metadata file for reference.

In this case, I did not have to make any changes from the system generated mappings. It is always a good idea to check your date format and values, as this is a common source of error. Now select Create Dataset and your Sales Data is in the Analytics Cloud.
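One way to catch date problems before uploading is to run a quick check over the CSV. This is a minimal sketch, assuming a date column named `Period` in `YYYY-MM-DD` form; the column names and sample rows are illustrative, and the Python format string is not the same notation used in the metadata file.

```python
import csv
import io
from datetime import datetime


def rows_with_bad_dates(csv_text, column, date_format):
    """Return (line number, value) pairs whose date does not match date_format."""
    bad = []
    reader = csv.DictReader(io.StringIO(csv_text))
    for line_number, row in enumerate(reader, start=2):  # line 1 is the header
        try:
            datetime.strptime(row[column], date_format)
        except ValueError:
            bad.append((line_number, row[column]))
    return bad


# Illustrative data: the second data row uses a different date layout.
sample_csv = (
    "Account,Product,Period,Price\n"
    "Acme,Widget,2015-01-31,100\n"
    "Acme,Gadget,31/01/2015,250\n"
)

problems = rows_with_bad_dates(sample_csv, "Period", "%Y-%m-%d")
print(problems)
```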

You can then start exploring the data by creating Lenses and Dashboards. What if you want to see this data linked with your Account details from Salesforce in order to analyze by state or account type?

Salesforce Data

Loading Salesforce data is a snap using the Dataset Builder user interface. In this example, I am only interested in pulling Account information, to keep it simple. I am going to add just the Account object and a few select fields to my dataset (Account ID, Account Name, Account Type, Industry and Region).

Next, select Create Dataset, provide a Dataset Name and use Create to save. This will add the associated JSON entries to the dataflow for this dataset. The data will not be loaded until the next scheduled run of the dataflow, currently set for once a day, or a manual run. Don’t start the dataflow yet, as we are going to have some fun first.

Dataflow JSON

We have avoided JSON manipulation so far, but that is about to change. You cannot combine external data with Salesforce data in the UI, but we can use previously loaded datasets in the dataflow. Start by downloading the dataflow JSON definition (SalesEdgeEltWorkflow.json) and opening it in your preferred editor. I use JSON Editor, but would be willing to switch if you have suggestions for a better tool.

Here is my dataflow JSON that shows the Account load.

  "101_Load_Account": {
    "action": "sfdcDigest",
    "parameters": {
      "object": "Account",
      "fields": [
        { "name": "Id" },
        { "name": "Name" },
        { "name": "Type" },
        { "name": "Industry" },
        { "name": "Region__c" }
      ]
    }
  },
  "102_Register_Account": {
    "action": "sfdcRegister",
    "parameters": {
      "source": "101_Load_Account",
      "alias": "Account",
      "name": "Account"
    }
  }

For simplicity, I have removed other flows for this example. By default, the workflow steps are labeled with numbers. I have added additional text to the name to help distinguish them. Your dataflow JSON may look different; what is important is to find the Account load from Salesforce.

This will be an sfdcDigest action with an object of Account and the fields we selected. Mine is named 101_Load_Account. The sfdcDigest action pulls the data from Salesforce. Next, find the step with an sfdcRegister action that has the same source name as the Account load. Mine is named 102_Register_Account; it makes the dataset accessible in the Analytics Cloud under the name supplied.
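If your dataflow is large, finding these two steps by eye can be tedious. Here is a minimal sketch of the same lookup in Python, assuming the dataflow JSON has been parsed into a dictionary; the helper names are my own, and the sample mirrors the two nodes shown above.

```python
def find_digest_node(dataflow, sobject):
    """Return the name of the first sfdcDigest node for sobject, or None."""
    for name, node in dataflow.items():
        parameters = node.get("parameters", {})
        if node.get("action") == "sfdcDigest" and parameters.get("object") == sobject:
            return name
    return None


def find_register_nodes(dataflow, source):
    """Return the names of sfdcRegister nodes that read from source."""
    return [
        name
        for name, node in dataflow.items()
        if node.get("action") == "sfdcRegister"
        and node.get("parameters", {}).get("source") == source
    ]


# The Account load and register steps from this post, as a Python dictionary.
account_dataflow = {
    "101_Load_Account": {
        "action": "sfdcDigest",
        "parameters": {
            "object": "Account",
            "fields": [{"name": "Id"}, {"name": "Name"}, {"name": "Type"},
                       {"name": "Industry"}, {"name": "Region__c"}],
        },
    },
    "102_Register_Account": {
        "action": "sfdcRegister",
        "parameters": {"source": "101_Load_Account", "alias": "Account", "name": "Account"},
    },
}

digest_name = find_digest_node(account_dataflow, "Account")
register_names = find_register_nodes(account_dataflow, digest_name)
print(digest_name, register_names)
```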

Now, we are going to add a couple of additional steps. First, we need to add an edgemart transformation. This allows us to load a previously created dataset into the dataflow.

  "200_Sales_Data": {
    "action": "edgemart",
    "parameters": {
      "alias": "Sales_Data"
    }
  }

If you don’t recall the alias name of your dataset, you can always use the lens.apexp page in your org for reference - https://<pod>.salesforce.com/analytics/wave/web/lens.apexp. My step is 200_Sales_Data and my alias was Sales_Data. Now, the Sales Data file we loaded earlier is available within our dataflow.

With the external data available, we can use an augment transformation to combine it with the account data. This is my step 201_Combine_Sales_Account with an augment action. We are going to make the Sales Data our left object and Account the right, specifying the join condition via left key (Account from the Sales file) and right key (Name from the Account file).

All of the columns from the Sales Data dataset are automatically included. We need to specify the columns to include from the right (the Account dataset) as well as the relationship prefix name to use, Sales in this case.

  "201_Combine_Sales_Account": {
    "action": "augment",
    "parameters": {
      "relationship": "Sales",
      "left_key": [
        "Account"
      ],
      "right_key": [
        "Name"
      ],
      "left": "200_Sales_Data",
      "right": "101_Load_Account",
      "right_select": [
        "Id",
        "Name",
        "Type",
        "Industry",
        "Region__c"
      ]
    }
  },
  "202_Register_Account_Sales_Dataset": {
    "action": "sfdcRegister",
    "parameters": {
      "source": "201_Combine_Sales_Account",
      "alias": "SalesWithAccounts",
      "name": "Sales With Accounts"
    }
  }
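To get a feel for what the augment step produces, here is a rough Python simulation. It is a sketch of the idea rather than the actual Wave behavior: it assumes every left row is kept, that the first matching right row wins, and that the selected right columns are added with the relationship name as a dotted prefix. The sample rows are invented.

```python
def augment(left_rows, right_rows, left_key, right_key, relationship, right_select):
    """Add prefixed right-side columns to each left row with a matching key."""
    lookup = {}
    for row in right_rows:
        key = tuple(row[field] for field in right_key)
        lookup.setdefault(key, row)  # assumption: keep the first match per key

    combined_rows = []
    for row in left_rows:
        combined = dict(row)  # all left columns are carried over
        match = lookup.get(tuple(row[field] for field in left_key))
        for field in right_select:
            combined[f"{relationship}.{field}"] = match[field] if match else None
        combined_rows.append(combined)
    return combined_rows


# Invented sample rows standing in for the two datasets.
sales_data = [
    {"Account": "Acme", "Product": "Widget", "Price": 100},
    {"Account": "Unknown Co", "Product": "Gadget", "Price": 50},
]
accounts = [
    {"Id": "001A", "Name": "Acme", "Type": "Customer",
     "Industry": "Energy", "Region__c": "East"},
]

combined = augment(
    sales_data, accounts,
    left_key=["Account"], right_key=["Name"],
    relationship="Sales",
    right_select=["Id", "Name", "Type", "Industry", "Region__c"],
)
for row in combined:
    print(row)
```

Rows without a matching account keep their sales columns and get empty account columns, which is a useful reminder to check that your key values match exactly.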

Save the dataflow JSON file and go back to Data Monitor. Before you upload, you may want to save a backup of the previous dataflow in case you need to revert. Then upload the dataflow JSON file and start the dataflow. Once the dataflow finishes without errors, you have a brand new dataset combining external and Salesforce data.
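A quick sanity check before uploading can save one of your daily dataflow runs. The sketch below parses the edited JSON and confirms that every step referenced by another step actually exists. Treating `source`, `left` and `right` as the parameters that point at other steps is my assumption, based only on the nodes used in this post.

```python
import json

# Assumption: these are the parameters that refer to other steps by name.
REFERENCE_PARAMETERS = ("source", "left", "right")


def missing_references(dataflow):
    """Return (node, parameter, target) for references to nodes that do not exist."""
    problems = []
    for name, node in dataflow.items():
        parameters = node.get("parameters", {})
        for key in REFERENCE_PARAMETERS:
            target = parameters.get(key)
            if target is not None and target not in dataflow:
                problems.append((name, key, target))
    return problems


# The steps from this post, wrapped in braces to form a complete JSON document.
dataflow_text = """
{
  "101_Load_Account": {"action": "sfdcDigest", "parameters": {"object": "Account",
    "fields": [{"name": "Id"}, {"name": "Name"}, {"name": "Type"},
               {"name": "Industry"}, {"name": "Region__c"}]}},
  "102_Register_Account": {"action": "sfdcRegister", "parameters": {
    "source": "101_Load_Account", "alias": "Account", "name": "Account"}},
  "200_Sales_Data": {"action": "edgemart", "parameters": {"alias": "Sales_Data"}},
  "201_Combine_Sales_Account": {"action": "augment", "parameters": {
    "relationship": "Sales", "left_key": ["Account"], "right_key": ["Name"],
    "left": "200_Sales_Data", "right": "101_Load_Account",
    "right_select": ["Id", "Name", "Type", "Industry", "Region__c"]}},
  "202_Register_Account_Sales_Dataset": {"action": "sfdcRegister", "parameters": {
    "source": "201_Combine_Sales_Account", "alias": "SalesWithAccounts",
    "name": "Sales With Accounts"}}
}
"""

dataflow = json.loads(dataflow_text)  # raises an error if the JSON is malformed
print(missing_references(dataflow))
```

An empty list means every reference resolves; a misspelled step name would show up as a tuple naming the step, the parameter and the missing target.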

Final Touches

With our new dataset, we can put together lenses and dashboards of sales activity, over time by various account attributes such as account type, key status, etc.

With the ability to load edgemarts into dataflows, we are not limited to combining Salesforce and external data. We could also load two sets of external data, load both into the dataflow and combine them into one final dataset. A very powerful feature of Analytics Cloud.

External Data Generation Notes

The Account Names are specific to my environment and may not match yours. I created a simple Sales Data Generator Excel Template that will generate random, sample data. Simply replace the Account Name on the Account tab. If you want to match on a different key to Salesforce, replace the values in Account Name with the desired data. Adding additional columns to the right of Account Name is fine.

The Dynamic tab uses a random number between one and the number of rows with a Row ID to find an Account. You can add more or fewer Accounts, just be sure to have Row ID values for valid rows. The same approach is used for Product.

The period is generated as a random date between a Start and End date specified on the Control Tab. To change the date range, simply enter the desired start and end date. Similarly, Price is selected between the Low and High Price on the control tab. Change the Control values for a different price range.

Finally, as the Dynamic tab recalculates on each change, you will want to create a static copy of the data for repeatability. Copy the columns from Dynamic and then head to the Sales tab. Perform a Paste Special of Values with Number Formatting. Now you can save the tab as Sales_Data.CSV and you are ready to go with your own custom data.
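If you would rather script the sample data than use the Excel template, the same approach can be sketched in Python. This is not a port of the template; the column names, value lists and function names are my own assumptions, and a fixed seed stands in for the static copy so that repeated runs give the same rows.

```python
import csv
import io
import random
from datetime import date, timedelta

FIELDNAMES = ["Account", "Product", "Period", "Price"]  # assumed column names


def generate_sales_rows(accounts, products, start, end, low_price, high_price,
                        count, seed=0):
    """Generate count random sales rows; the same seed gives the same rows."""
    rng = random.Random(seed)
    span_days = (end - start).days
    rows = []
    for _ in range(count):
        period = start + timedelta(days=rng.randint(0, span_days))
        rows.append({
            "Account": rng.choice(accounts),
            "Product": rng.choice(products),
            "Period": period.isoformat(),
            "Price": rng.randint(low_price, high_price),
        })
    return rows


def to_csv(rows):
    """Render rows as CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDNAMES, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Replace these with the Account names and products from your own org.
sample_rows = generate_sales_rows(
    accounts=["Acme", "Globex"],
    products=["Widget", "Gadget"],
    start=date(2015, 1, 1),
    end=date(2015, 3, 31),
    low_price=10,
    high_price=500,
    count=5,
    seed=42,
)
print(to_csv(sample_rows))
```

Write the returned text to a file such as Sales_Data.csv to load it as an external dataset.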