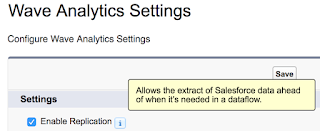

Salesforce Analytics Cloud includes a replication feature that will pre-populate Wave with Salesforce data. Replicated data can greatly improve dataflow execution times.

Enabling replication also consolidates multiple digest statements in dataflows into a single extract. If you have used the Dataset builder and selected the same object more than once, your dataflow will digest that object multiple times.

Simply check the option and away you go... well, almost. There are a few considerations to take into account when using replicated data. Here's what to keep in mind, along with my list of Tips & Tricks.

How Replication Works

Before diving into Replication, let's examine how Wave pulls data from Salesforce. Each digest node in a dataflow pulls the data from Salesforce using the Bulk API. You can easily verify this by checking the Bulk Data Load Job status. You will see a job for each object, executed by the Integration User.

Now, the Bulk API is awesome when working with large sets of data. Pulling out hundreds of thousands or millions of rows of data is not a problem. However, to support large data volumes, the Bulk API operates asynchronously. This means your request can queue for a while based on overall Salesforce workload. If you have ever wondered why dataflow execution times can vary, the Bulk API plays a large part.

What Happens When You Enable Replication

When you enable replication, all your scheduled dataflows are examined. Each sfdcDigest node is reviewed to see which fields are being used. Then replication is enabled for each object, for the fields you are using.

If you have multiple dataflows set up, replication will merge the sfdcDigest nodes. All the fields for each object across all the dataflows are included in replication. If there are conflicts with object level parameters across the dataflows, the values from the most recently created dataflow are used. This includes attributes like fiscal offsets for dates or complex filters.
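To make the merge rule concrete, here is a minimal Python sketch of how fields and object-level parameters could be combined across dataflows. It is only an illustration of the behavior described above, not how Wave implements it. The function name `merge_digest_nodes`, the sample node names, and the filter values are hypothetical. I also assume the dataflows are passed in oldest first and that a newer dataflow only overrides the parameters it actually sets.

```python
def merge_digest_nodes(dataflows):
    """Merge sfdcDigest nodes from several dataflow definitions.

    `dataflows` is a list of dataflow dicts ordered oldest to newest
    (an assumption made for this sketch).
    """
    merged = {}
    for dataflow in dataflows:
        for node in dataflow.values():
            if node.get("action") != "sfdcDigest":
                continue
            params = node["parameters"]
            entry = merged.setdefault(
                params["object"], {"fields": [], "options": {}}
            )
            # Union of fields across all dataflows, keeping first-seen order.
            for field in params.get("fields", []):
                if field["name"] not in entry["fields"]:
                    entry["fields"].append(field["name"])
            # Object-level parameters: later (newer) dataflows overwrite earlier ones.
            for key, value in params.items():
                if key not in ("object", "fields"):
                    entry["options"][key] = value
    return merged


# Hypothetical sample dataflows, oldest first.
older = {
    "Extract_Account": {
        "action": "sfdcDigest",
        "parameters": {
            "object": "Account",
            "fields": [{"name": "Id"}, {"name": "Name"}],
            "complexFilterConditions": "Type = 'Prospect'",
        },
    }
}
newer = {
    "Digest_Account": {
        "action": "sfdcDigest",
        "parameters": {
            "object": "Account",
            "fields": [{"name": "Id"}, {"name": "Industry"}],
            "complexFilterConditions": "Type = 'Customer'",
        },
    }
}

merged = merge_digest_nodes([older, newer])
print(merged["Account"])
```

In this sketch the Account object ends up with the fields from both dataflows, while the filter comes from the newer one. That is the kind of surprise worth checking for before you enable replication.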

You will also have an Ingest node in Data Manager to control the replication details. From here, you can view the details of a replication object, change the replication schedule or even run the replication.

Why Enable Replication

So now that we understand how replication works, why should we enable it? First, replication improves dataflow performance. The data is preloaded into Wave and ready to use when you need it. In addition, as replication pulls changes incrementally, there is less data being pulled out of Salesforce and flowing into Wave each time.

A second benefit with replication is efficiency. When enabling replication, multiple digest nodes of the same object are consolidated into one. It's easy to build datasets with Dataset builder and end up pulling the same object multiple times. For example, join Cases to Accounts, then Opportunities to Accounts and finally Orders to Accounts. Now your Account object is being pulled three times. Once you enable replication, the Account object is only pulled once.
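If you want to see how often each object is digested before enabling replication, a quick check against the downloaded dataflow JSON might look like the sketch below. The helper name `count_digests` and the sample node names are hypothetical. The sample is trimmed to just the digest nodes from the Cases, Opportunities and Orders example above.

```python
from collections import Counter


def count_digests(dataflow):
    """Count how many sfdcDigest nodes pull each Salesforce object."""
    return Counter(
        node["parameters"]["object"]
        for node in dataflow.values()
        if node.get("action") == "sfdcDigest"
    )


def digest(obj):
    # Helper to build a minimal sfdcDigest node for the sample below.
    return {"action": "sfdcDigest", "parameters": {"object": obj, "fields": []}}


# Hypothetical dataflow built by joining three objects to Account.
sample_dataflow = {
    "Digest_Case": digest("Case"),
    "Digest_Account_1": digest("Account"),
    "Digest_Opportunity": digest("Opportunity"),
    "Digest_Account_2": digest("Account"),
    "Digest_Order": digest("Order"),
    "Digest_Account_3": digest("Account"),
}

counts = count_digests(sample_dataflow)
repeated = {obj: n for obj, n in counts.items() if n > 1}
print(repeated)
```

Any object that shows up in `repeated` is being extracted more than once per run, which is exactly what replication consolidates.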

I have seen significant performance improvements after enabling replication. In one of my orgs, the dataflow took between 15 and 18 minutes to execute. While this dataflow pulled around a dozen objects from Salesforce, there were only a few hundred rows of data in total. The overhead of waiting for the Bulk API to execute asynchronously accounted for most of the execution time.

Once I enabled replication, the dataflow execution time dropped to between 3 and 5 minutes. While it still takes time for the replicated data to preload into Wave, that happens on a scheduled basis. As adding dataflow transformations is a trial and error process, not having to wait for the data to extract from Salesforce just to find out my computeExpression has a typo has been a huge time saver.

Tips & Tricks

After working with Replication, here’s my list of tips and tricks to consider.

- After enabling replication from the Settings menu, be sure to Run Replication Now from the Ingest menu in Wave Data Manager. If replication has not been run, a "Replicated dataset was not found." error will occur.

- When enabling replication, only scheduled dataflows are reviewed for the objects and fields to replicate. If you run a dataflow that was not scheduled and it uses objects or fields not in the other dataflow(s), the replicated dataset or fields may not be found.

  - To resolve, schedule the dataflow, which will update the replicated objects.

  - Run Replication Now.

  - Unschedule the dataflow.

  - When you unschedule the dataflow, the objects and fields are not removed from replication.

  - Keep in mind any additional changes to the dataflow will not be reflected in the replication.

- When adding fields or objects to a scheduled dataflow, the items are automatically added to the replication process. However, the replication must be run in order for the dataflow to execute successfully.

- When scheduling your replication, keep in mind that it should occur before the dataflow schedule. Be sure to allot enough time for the replication to complete before a dataflow starts.

- Uploading a new dataflow may take longer when replication is enabled. The upload process needs to examine the updated dataflow and ensure all objects and fields are included in the replication.

- If you remove a field from a Salesforce object that is part of replication, the replication will fail. Head to the Ingest tab in Data Manager. Click on the replicated object. You will receive an error about the field being unavailable. Click through the message, find the field name and click the X in the column to remove it. Save the Replication Settings and run the replication.

- Similarly, if you remove an object or field from a dataflow, it is not removed from replication. You can manually remove the object or field from the Ingest tab in Data Manager.

- Keep in mind the replication run counts against your daily dataflow execution limit. I believe a future release will stop counting replication towards the limit.

- While your current daily dataflow execution limit is 24 (success or failure), runs under 1 minute in duration do not count towards the limit. Future releases may increase this limit.

- Enabling Replication also enables the ability to create multiple dataflows. Use the Create button from the Dataflow view of the Monitor tab in Data Manager. This can be useful to segment different logical data load processes. You can create up to 10 dataflows. However, keep in mind each dataflow execution counts towards the daily limit.

- Replicated Object Color Coding

  - Green = success

  - Orange = replication settings have changed, run replication

  - Blue = replication has not been run

  - Red = replication failure

- If you do not want to extract data incrementally, you can change it in the dataflow digest node.
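To keep an eye on the daily limit mentioned in the tips above, here is a rough Python sketch of the counting rule as I understand it. The limit of 24 and the 1 minute threshold come from this post and may change in future releases. The function names and the sample durations are hypothetical, and I am assuming a run of exactly 60 seconds counts.

```python
DAILY_LIMIT = 24            # daily execution limit quoted in this post
MIN_COUNTED_SECONDS = 60    # runs shorter than this do not count


def runs_counted(durations_seconds):
    """Count the runs (dataflow or replication, success or failure) that hit the limit."""
    return sum(1 for d in durations_seconds if d >= MIN_COUNTED_SECONDS)


def runs_remaining(durations_seconds, limit=DAILY_LIMIT):
    """Return how many counted runs are left for the day, never below zero."""
    return max(limit - runs_counted(durations_seconds), 0)


# Hypothetical durations, in seconds, for today's dataflow and replication runs.
todays_runs = [45, 200, 900, 30, 61]

print(runs_counted(todays_runs), runs_remaining(todays_runs))
```

Remember to include replication runs in the list, since they count against the same limit as dataflow runs.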
